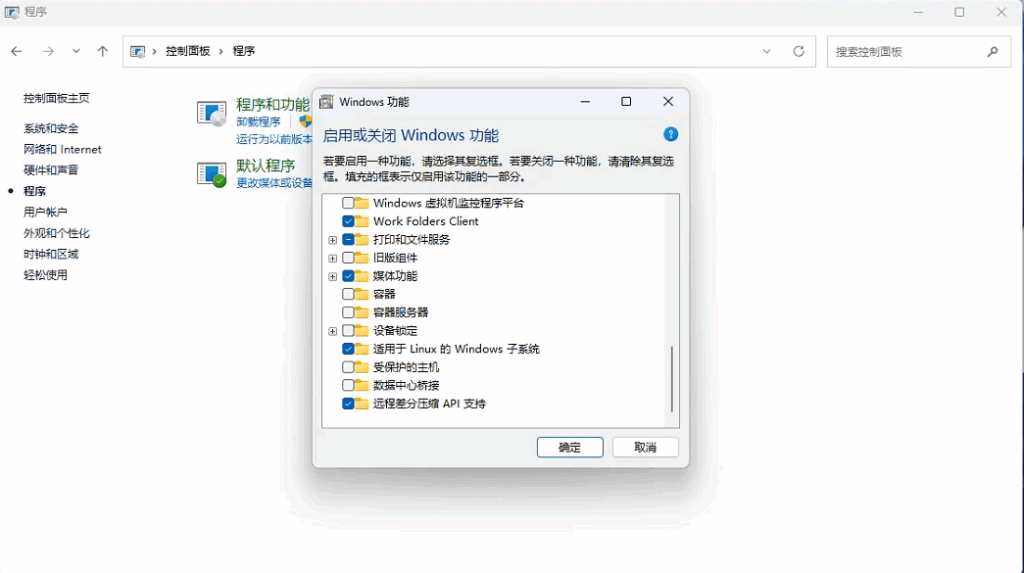

Install WSL

Control Panel -> Programs -> Turn Windows features on or off

Check "Windows Subsystem for Linux"

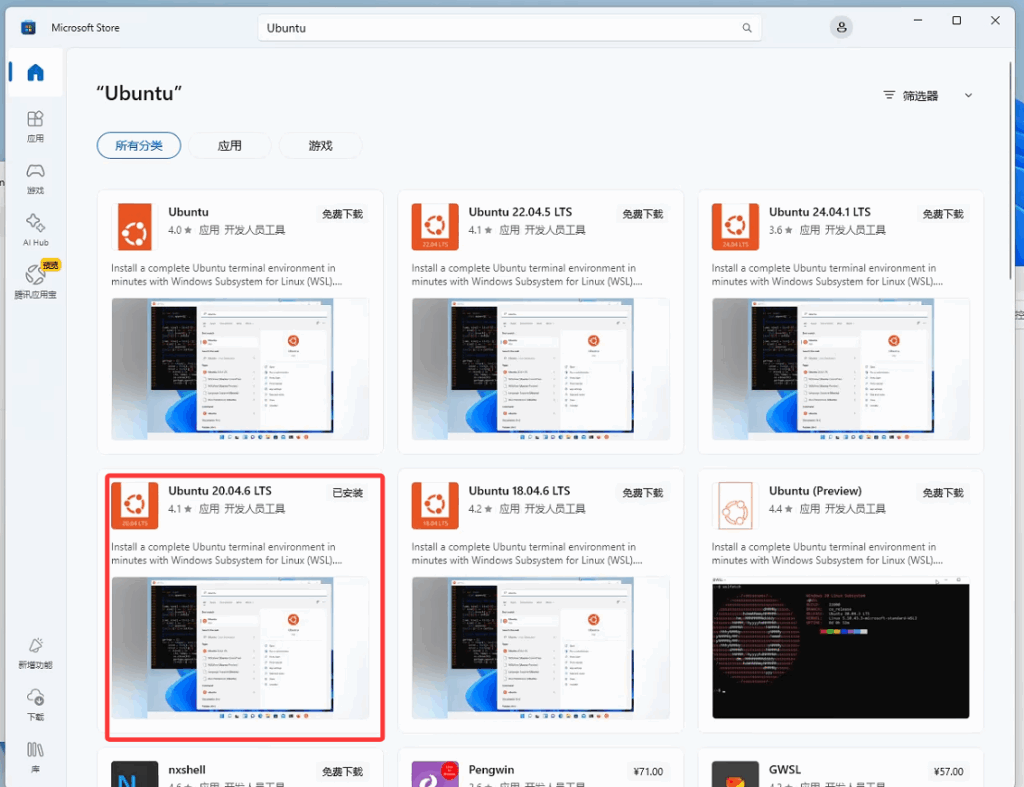

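Open PowerShell and run

wsl.exe --install

You can also search for Ubuntu in the Microsoft Store and download version 20.04.6

Initialize Ubuntu

Once the installation finishes, search for Ubuntu in the Start menu and open it

On first launch you are asked to create a username and password; choose your own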

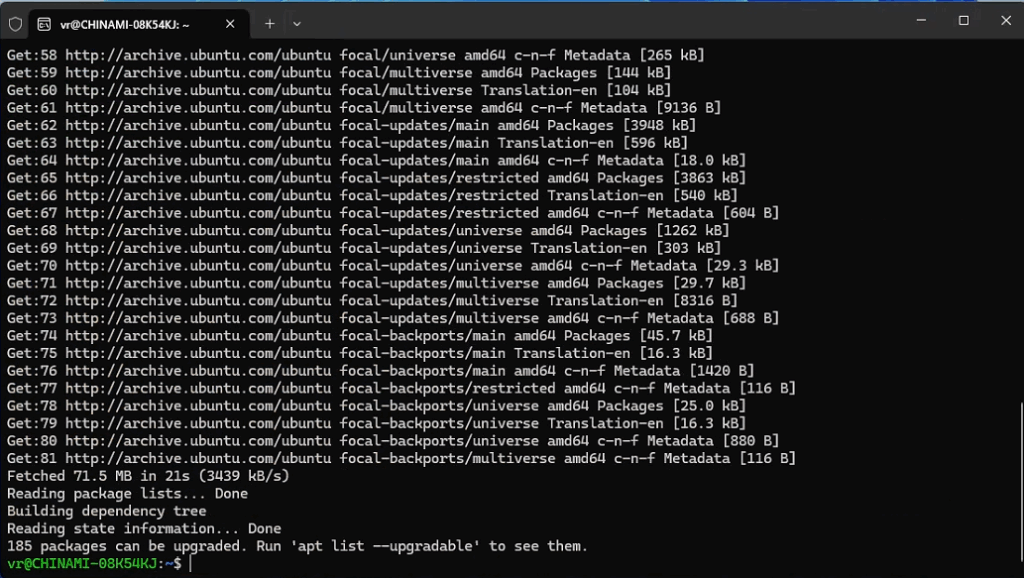

Switch the apt mirror

Edit the sources list

sudo nano /etc/apt/sources.list

Add the following lines at the very top of the file

deb http://mirrors.aliyun.com/ubuntu/ focal main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ focal-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ focal-updates main restricted universe multiverse

Press Ctrl+X, type y and press Enter to save the changes

Run the following command to refresh the apt package index

sudo apt update
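The three mirror entries differ only in the suite name, so they can be generated instead of typed by hand. The following is a minimal sketch, assuming the Aliyun mirror URL and the `focal` codename used in this guide; `build_sources` is a hypothetical helper written for illustration, not part of apt.

```python
from typing import List

# Assumptions taken from this guide: Aliyun mirror, Ubuntu 20.04 ("focal").
MIRROR = "http://mirrors.aliyun.com/ubuntu/"
COMPONENTS = "main restricted universe multiverse"


def build_sources(codename: str = "focal", mirror: str = MIRROR) -> List[str]:
    """Return the deb lines for the base, security and updates suites."""
    suites = [codename, f"{codename}-security", f"{codename}-updates"]
    return [f"deb {mirror} {suite} {COMPONENTS}" for suite in suites]


if __name__ == "__main__":
    # Print the lines so they can be pasted at the top of /etc/apt/sources.list.
    print("\n".join(build_sources()))
```

If you use a different Ubuntu release, pass its codename (for example `build_sources("jammy")`) so the suites match the installed system.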

Install gcc

sudo apt install gcc

Install git

sudo apt install git

Install ninja

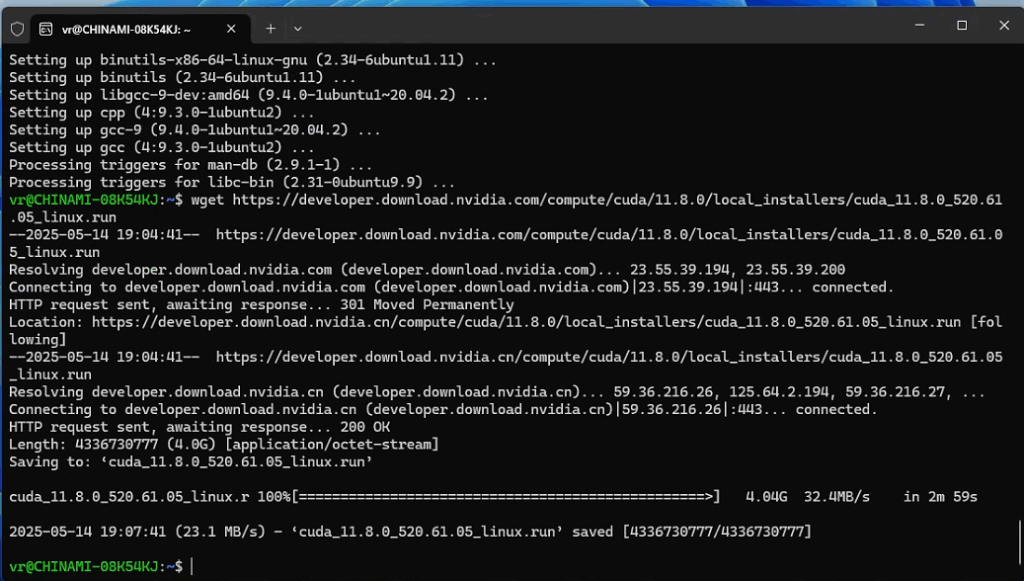

sudo apt-get install ninja-build

Install CUDA

The U-Mamba environment requires CUDA 11.8

wget https://developer.download.nvidia.com/compute/cuda/11.8.0/local_installers/cuda_11.8.0_520.61.05_linux.run

After the download finishes, run

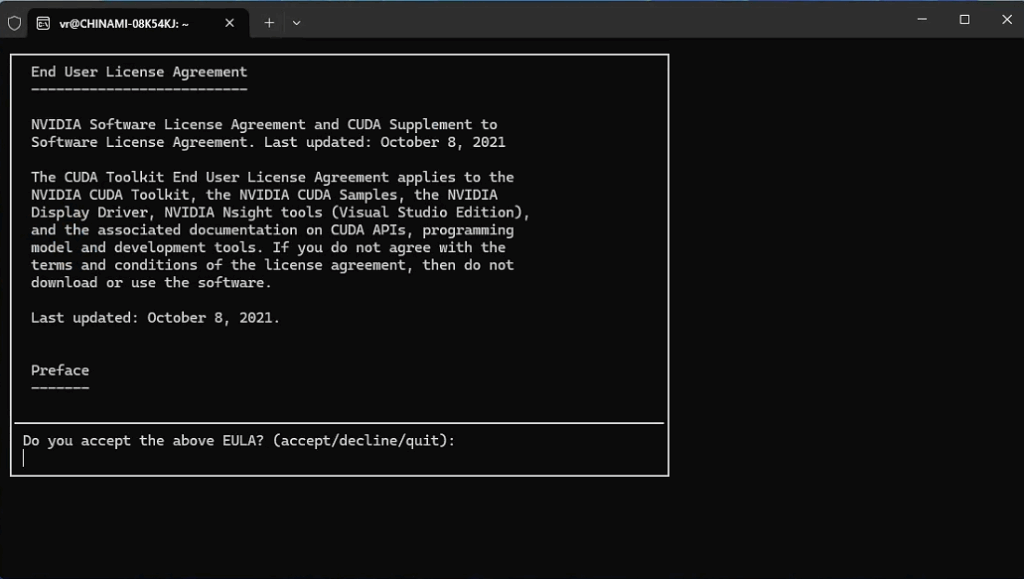

sudo sh cuda_11.8.0_520.61.05_linux.run

Wait for the license prompt to appear, then type accept

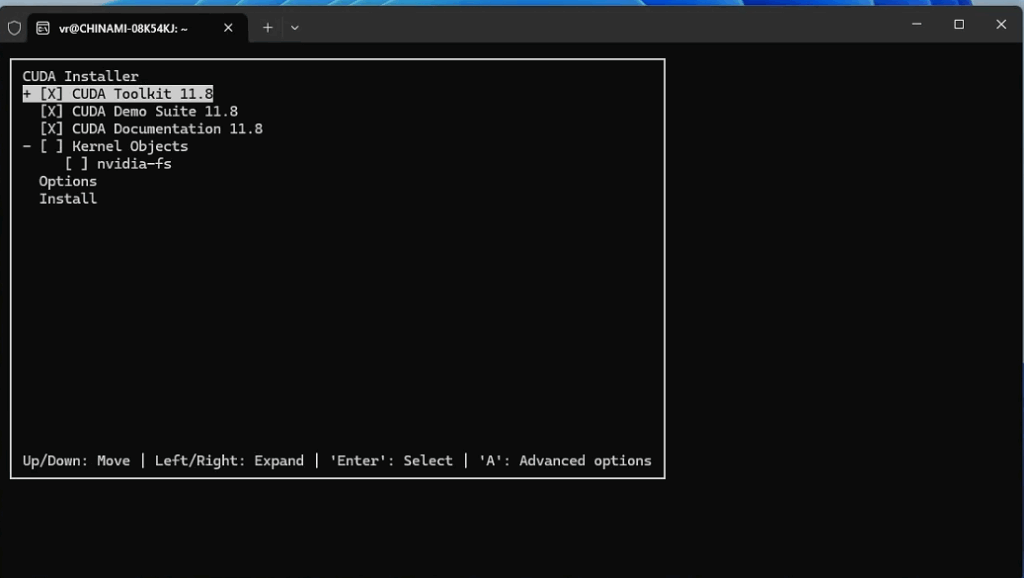

If a Driver entry is listed, deselect it (press Space to uncheck)

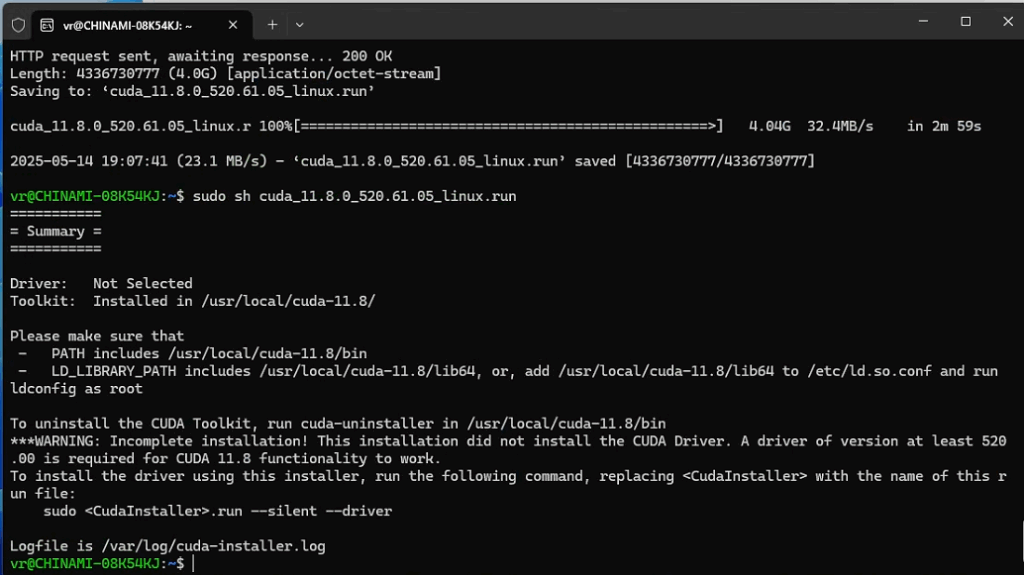

Select Install and wait for the installation to finish; output similar to the following means it succeeded

Install cuDNN (optional)

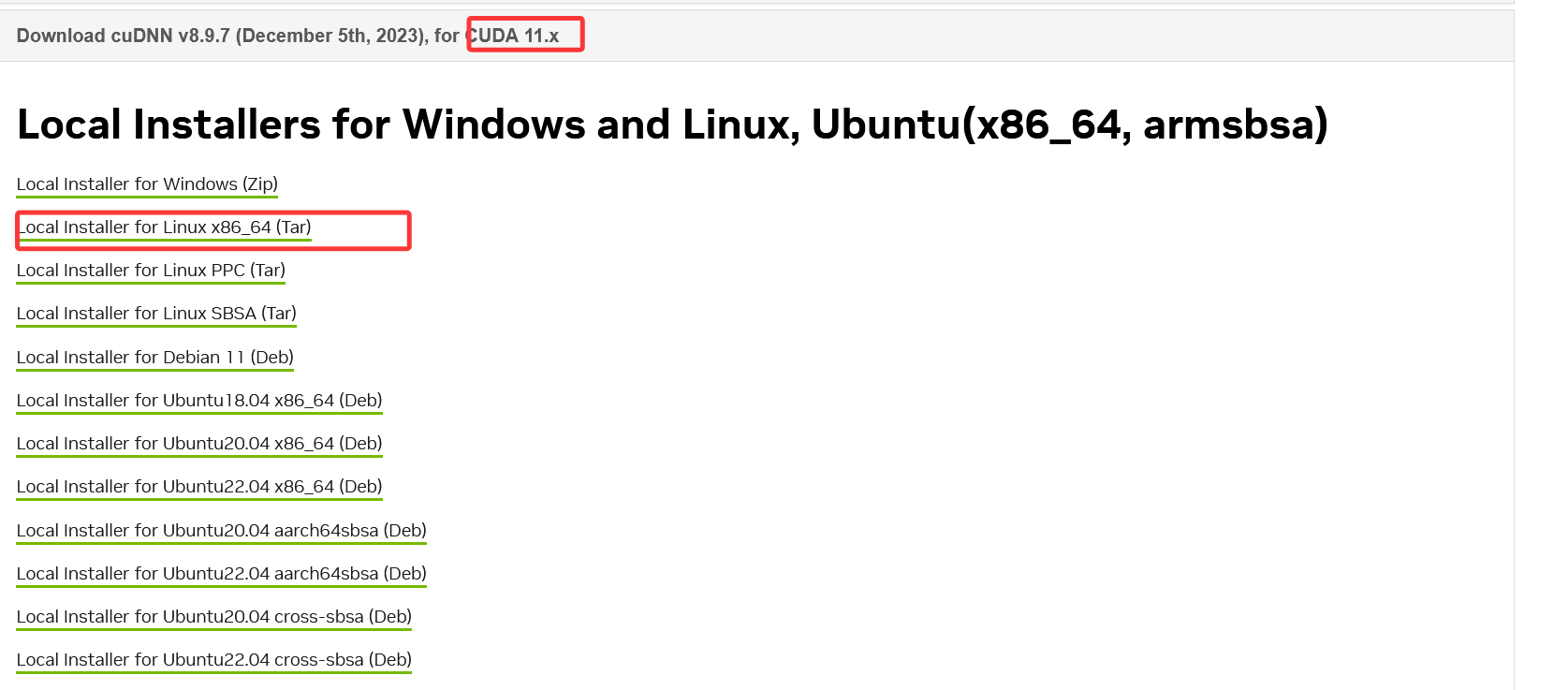

Go to the cuDNN download page: cuDNN Archive | NVIDIA Developer

Find cuDNN for CUDA 11.x and download "Local Installer for Linux x86_64 (Tar)"

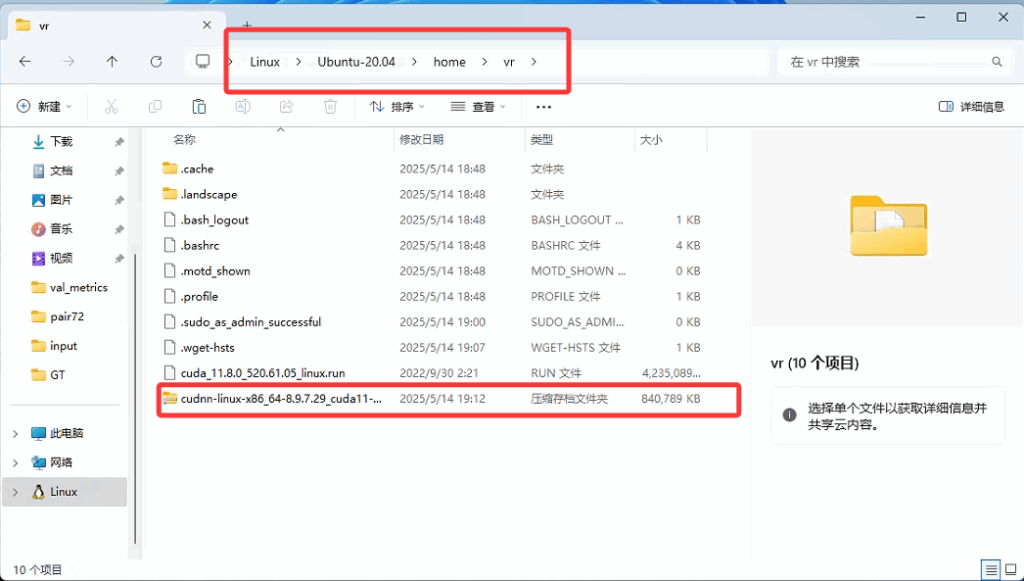

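After the download finishes, open File Explorer (This PC), find Linux, enter Ubuntu, and place the archive in the home/{username}/ folder ({username} stands for your own username)

Extract the cuDNN archive into your home directory

tar -xJvf ~/cudnn-linux-x86_64-8.9.7.29_cuda11-archive.tar.xz -C ~

Copy the files into the CUDA directory and grant read permission. cuDNN 8 ships several header files, so copy all of them rather than only cudnn.h

sudo cp ~/cudnn-linux-x86_64-8.9.7.29_cuda11-archive/include/cudnn*.h /usr/local/cuda-11.8/include

sudo cp -P ~/cudnn-linux-x86_64-8.9.7.29_cuda11-archive/lib/libcudnn* /usr/local/cuda-11.8/lib64

sudo chmod a+r /usr/local/cuda-11.8/include/cudnn*.h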

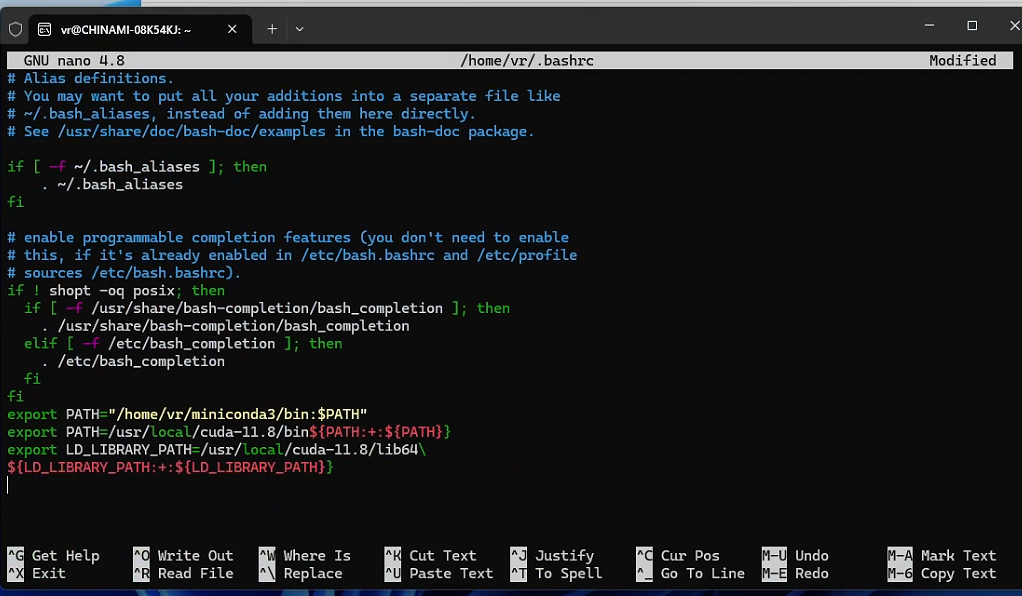

sudo chmod a+r /usr/local/cuda-11.8/lib64/libcudnn*

Configure environment variables

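nano ~/.bashrc

Add the following at the end of the file

export PATH=/usr/local/cuda-11.8/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-11.8/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}

Press Ctrl+X, type y and press Enter to save, then run the following command to reload the variables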

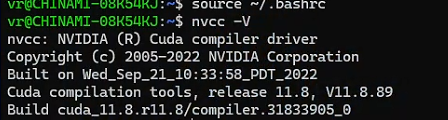

source ~/.bashrc

Verify the CUDA installation

Run nvcc -V; output like the following means the installation succeeded
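The same check can be scripted, which is handy when you want to confirm that the toolkit on PATH really is 11.8 rather than reading the banner by eye. This is a minimal sketch: it assumes `nvcc -V` prints a line containing `release <major>.<minor>`, and the function names are hypothetical helpers written for illustration.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple


def parse_nvcc_release(text: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from text such as '... release 11.8, V11.8.89'."""
    match = re.search(r"release (\d+)\.(\d+)", text)
    return (int(match.group(1)), int(match.group(2))) if match else None


def installed_cuda_release() -> Optional[Tuple[int, int]]:
    """Run `nvcc -V` if nvcc is on PATH; return None when it is missing."""
    if shutil.which("nvcc") is None:
        return None
    result = subprocess.run(["nvcc", "-V"], capture_output=True, text=True)
    return parse_nvcc_release(result.stdout)


if __name__ == "__main__":
    release = installed_cuda_release()
    if release == (11, 8):
        print("CUDA 11.8 toolkit found")
    else:
        print(f"Expected CUDA 11.8, found: {release}")
```

A result of `None` usually means the PATH line was not added to ~/.bashrc or the shell has not been reloaded yet.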

Install Miniconda

Download the install script

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh

Run the script

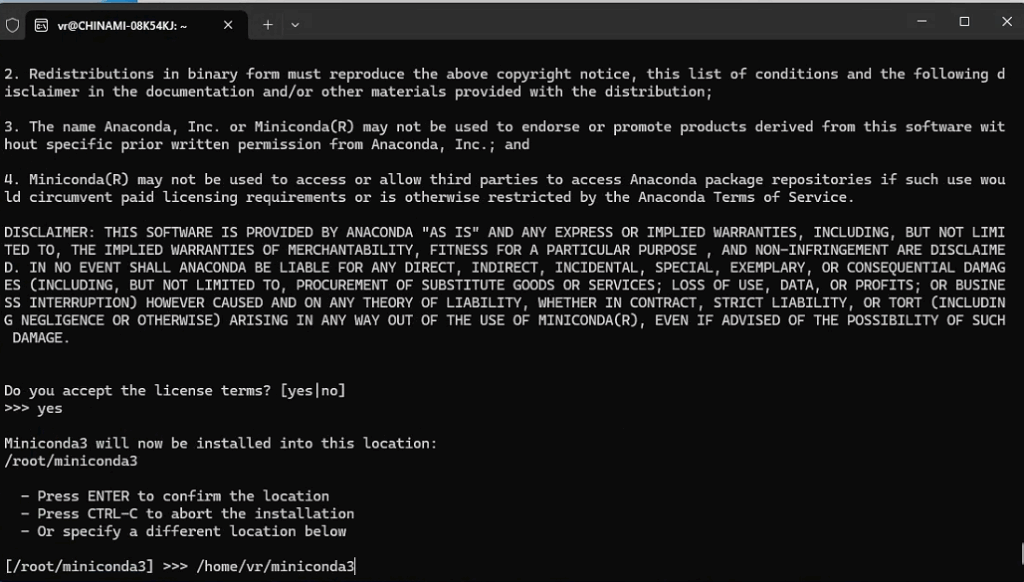

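bash ./Miniconda3-latest-Linux-x86_64.sh

Run the installer as your normal user (without sudo) so that the files stay owned by your account. After accepting the license, enter /home/{username}/miniconda3 as the install location and press Enter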

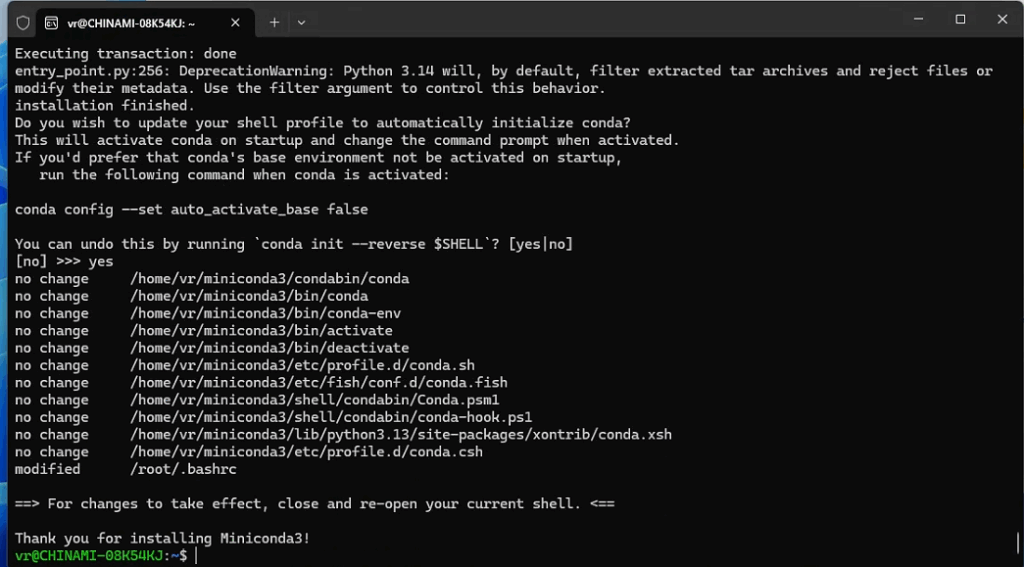

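Type yes to complete the installation

Configure environment variables

nano ~/.bashrc

Add the following at the end of the file

export PATH="/home/{username}/miniconda3/bin:$PATH"

Press Ctrl+X, type y and press Enter to save, then run the following command to reload the variables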

source ~/.bashrc

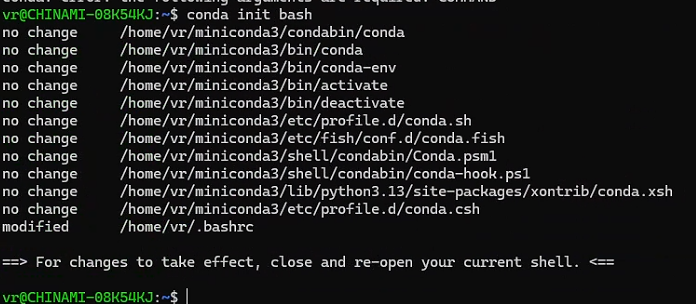

Run conda; if it prints its usage text, the configuration works. Then run the following command to initialize conda

conda init bash

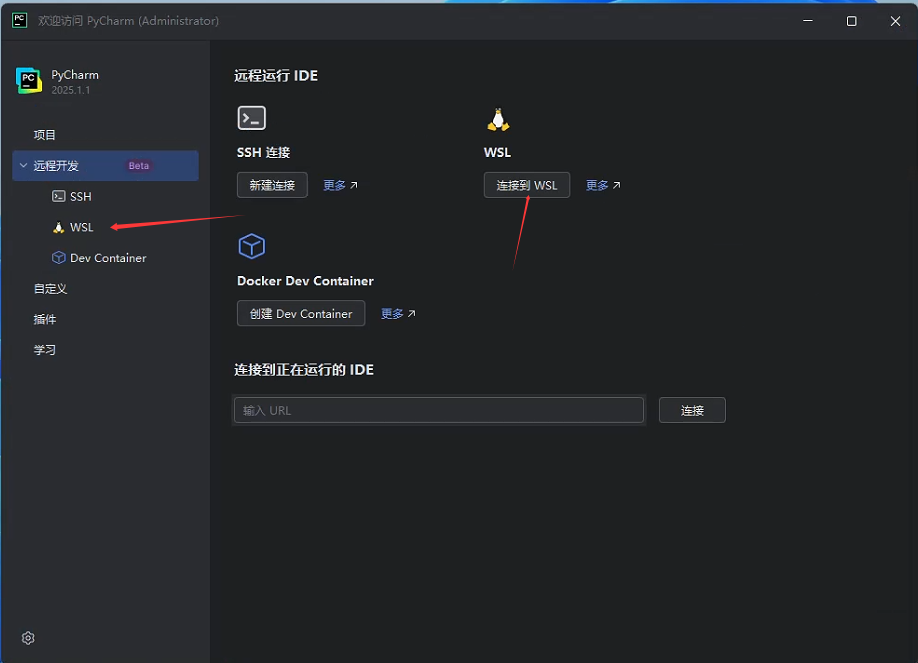

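Install PyCharm on Windows for code editing

The version must support WSL; this is not covered in detail here

Configure U-Mamba

Open a new Ubuntu terminal, create a code directory and enter it

mkdir code && cd code

Clone the repository

git clone https://github.com/bowang-lab/U-Mamba.git

Open it with PyCharm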

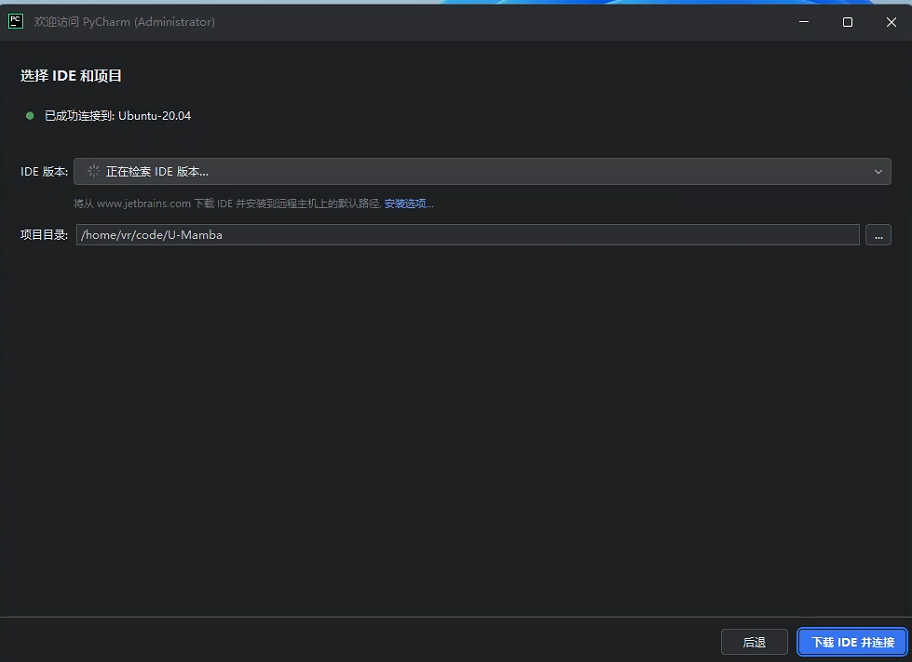

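Connect to Ubuntu 20.04 and select the directory you just cloned

Click "Download and connect" and wait for the download to finish. Once the project is open, open the terminal and set up the project following README.md

- Create the virtual environment

conda create -n umamba python=3.10 -y

- Activate the virtual environment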

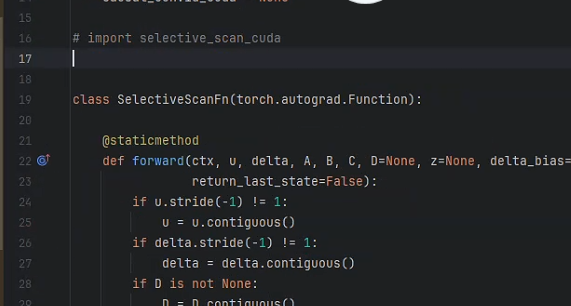

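conda activate umamba

- Switch PyCharm's interpreter to the virtual environment; it will be needed shortly

Click Add New Interpreter -> Add Local Interpreter -> Conda -> Existing environment -> umamba

- Install PyTorch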

pip install torch==2.0.1 torchvision==0.15.2 --index-url https://download.pytorch.org/whl/cu118
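Before moving on, it helps to confirm that the installed PyTorch build matches what the later wheels expect. The following is a minimal sketch using standard PyTorch attributes (`torch.__version__`, `torch.version.cuda`, `torch.cuda.is_available()`, `torch.compiled_with_cxx11_abi()`); the function name `torch_cuda_report` is a hypothetical helper, and the expected values in the comments are assumptions based on the install command above.

```python
import importlib.util


def torch_cuda_report() -> dict:
    """Summarise the installed PyTorch build; safe to run even if torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}
    import torch  # imported lazily so the script still runs without PyTorch

    return {
        "installed": True,
        "torch": torch.__version__,             # expected here: 2.0.1+cu118
        "built_for_cuda": torch.version.cuda,   # expected here: 11.8
        "gpu_visible": torch.cuda.is_available(),
        "cxx11_abi": torch.compiled_with_cxx11_abi(),
    }


if __name__ == "__main__":
    for key, value in torch_cuda_report().items():
        print(f"{key}: {value}")
```

If `gpu_visible` is False inside WSL, the usual suspects are the Windows NVIDIA driver or a CPU-only PyTorch build rather than the CUDA toolkit itself.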

- Install causal-conv1d and mamba-ssm (this is where the pitfalls begin)

Download the causal-conv1d and mamba-ssm wheels that match your environment from GitHub, put the two whl files in the project root, and run the following in the PyCharm terminal

pip install causal_conv1d-1.2.0.post1+cu118torch2.0cxx11abiTRUE-cp310-cp310-linux_x86_64.whl -i https://pypi.tuna.tsinghua.edu.cn/simple

pip install mamba_ssm-1.2.0.post1+cu118torch2.0cxx11abiTRUE-cp310-cp310-linux_x86_64.whl -i https://pypi.tuna.tsinghua.edu.cn/simple
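The wheel filenames encode the CUDA version, PyTorch version, C++ ABI flag and Python version they were built for, and a mismatch in any of them is a common source of import errors later. The sketch below parses those tags so they can be compared with the environment. It assumes the naming pattern seen in the two filenames above; `parse_wheel` and `matches_environment` are hypothetical helpers, not part of pip.

```python
import re
from typing import Dict

# Pattern inferred from the filenames used in this guide (an assumption).
WHEEL_RE = re.compile(
    r"^(?P<name>[^-]+)-(?P<version>[^-+]+)"
    r"\+cu(?P<cuda>\d+)torch(?P<torch>\d+\.\d+)cxx11abi(?P<abi>TRUE|FALSE)"
    r"-(?P<python>cp\d+)-cp\d+-(?P<platform>.+)\.whl$"
)


def parse_wheel(filename: str) -> Dict[str, str]:
    """Split a prebuilt wheel filename into its build tags."""
    match = WHEEL_RE.match(filename)
    if match is None:
        raise ValueError(f"Unrecognised wheel name: {filename}")
    return match.groupdict()


def matches_environment(filename: str, cuda: str = "118",
                        torch: str = "2.0", python: str = "cp310") -> bool:
    """Check the CUDA, PyTorch and Python tags against the target environment."""
    tags = parse_wheel(filename)
    return (tags["cuda"], tags["torch"], tags["python"]) == (cuda, torch, python)


if __name__ == "__main__":
    name = ("mamba_ssm-1.2.0.post1+cu118torch2.0cxx11abiTRUE"
            "-cp310-cp310-linux_x86_64.whl")
    print(parse_wheel(name))
    print("matches:", matches_environment(name))
```

The `abi` tag is also worth comparing with the value of `torch.compiled_with_cxx11_abi()` in your environment; if the two disagree, the compiled extension may fail to import.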

- Install U-Mamba

cd umamba/

pip install -e . -i https://pypi.tuna.tsinghua.edu.cn/simple

Dataset configuration

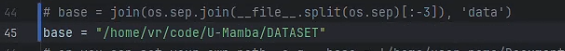

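Create a DATASET folder in the project directory, right-click it and copy its absolute path, then open umamba -> nnunetv2 -> paths.py and set base to the copied path

Inside DATASET, create three folders: nnUNet_raw, nnUNet_preprocessed and nnUNet_results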
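The folder layout can also be created with a few lines of Python, which additionally prints the absolute path to paste into paths.py. This is a minimal sketch, assuming the three folder names nnU-Net v2 conventionally uses (nnUNet_raw, nnUNet_preprocessed, nnUNet_results) and that it is run from the project root; `create_dataset_layout` is a hypothetical helper.

```python
from pathlib import Path
from typing import List

# Folder names assumed from the nnU-Net v2 convention.
SUBFOLDERS = ("nnUNet_raw", "nnUNet_preprocessed", "nnUNet_results")


def create_dataset_layout(base: Path) -> List[Path]:
    """Create the base folder and its three subfolders; existing folders are kept."""
    created = []
    for name in SUBFOLDERS:
        folder = base / name
        folder.mkdir(parents=True, exist_ok=True)
        created.append(folder)
    return created


if __name__ == "__main__":
    base = Path("DATASET").resolve()
    create_dataset_layout(base)
    # This is the value to assign to `base` in paths.py.
    print(base)
```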

For how to prepare a dataset, see the nnU-Net v2 documentation; it is not covered in detail here

Troubleshooting

selective_scan_cuda

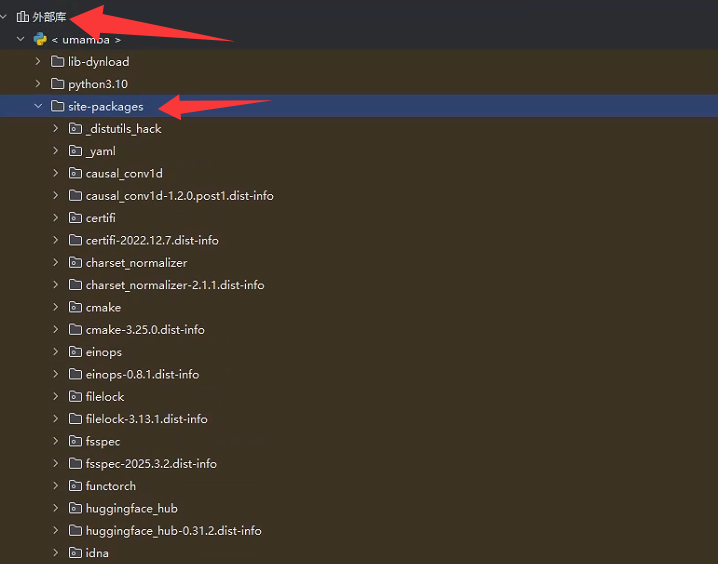

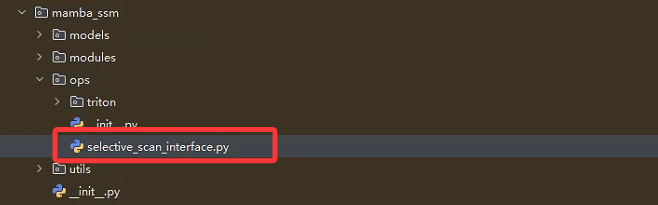

Click External Libraries -> site-packages -> mamba_ssm -> ops -> selective_scan_interface.py

Comment out import selective_scan_cuda

Then find the following two functions, around lines 82 and 305

    def selective_scan_fn(u, delta, A, B, C, D=None, z=None, delta_bias=None, delta_softplus=False,
                          return_last_state=False):
        """if return_last_state is True, returns (out, last_state)
        last_state has shape (batch, dim, dstate). Note that the gradient of the last state is
        not considered in the backward pass.
        """
        return SelectiveScanFn.apply(u, delta, A, B, C, D, z, delta_bias, delta_softplus, return_last_state)

    def mamba_inner_fn(
        xz, conv1d_weight, conv1d_bias, x_proj_weight, delta_proj_weight,
        out_proj_weight, out_proj_bias,
        A, B=None, C=None, D=None, delta_bias=None, B_proj_bias=None,
        C_proj_bias=None, delta_softplus=True
    ):
        return MambaInnerFn.apply(xz, conv1d_weight, conv1d_bias, x_proj_weight, delta_proj_weight,
                                  out_proj_weight, out_proj_bias,
                                  A, B, C, D, delta_bias, B_proj_bias, C_proj_bias, delta_softplus)

Change them to the following, respectively

    def selective_scan_fn(u, delta, A, B, C, D=None, z=None, delta_bias=None, delta_softplus=False,
                          return_last_state=False):
        """if return_last_state is True, returns (out, last_state)
        last_state has shape (batch, dim, dstate). Note that the gradient of the last state is
        not considered in the backward pass.
        """
        return selective_scan_ref(u, delta, A, B, C, D, z, delta_bias, delta_softplus, return_last_state)

    def mamba_inner_fn(
        xz, conv1d_weight, conv1d_bias, x_proj_weight, delta_proj_weight,
        out_proj_weight, out_proj_bias,
        A, B=None, C=None, D=None, delta_bias=None, B_proj_bias=None,
        C_proj_bias=None, delta_softplus=True
    ):
        return mamba_inner_ref(xz, conv1d_weight, conv1d_bias, x_proj_weight, delta_proj_weight,
                               out_proj_weight, out_proj_bias,
                               A, B, C, D, delta_bias, B_proj_bias, C_proj_bias, delta_softplus)

selective_scan_ref and mamba_inner_ref are defined in the same file. They run in plain PyTorch instead of the compiled CUDA kernel, so expect them to be slower

numpy

pip uninstall numpy

pip install numpy==1.26.3 -i https://pypi.tuna.tsinghua.edu.cn/simple

Dataset verification

Replace DATASET_ID with the numeric ID of your dataset

nnUNetv2_plan_and_preprocess -d DATASET_ID --verify_dataset_integrity

Start training

nnUNet_n_proc_DA=0 CUDA_VISIBLE_DEVICES=0 nnUNetv2_train 1 3d_fullres all -tr nnUNetTrainerUMambaBot
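The training command combines two environment variables with positional arguments (dataset ID, configuration, fold) and the trainer name. When launching several runs, it can be easier to assemble this from Python. The following is a minimal sketch that reproduces the command above; `build_train_call` and its parameter names are hypothetical, and the defaults simply mirror the values used in this guide.

```python
import os
import shutil
import subprocess
from typing import Dict, List, Tuple


def build_train_call(dataset_id: int = 1, configuration: str = "3d_fullres",
                     fold: str = "all", trainer: str = "nnUNetTrainerUMambaBot",
                     gpu: str = "0", workers: int = 0) -> Tuple[List[str], Dict[str, str]]:
    """Return (argv, env) equivalent to the shell command in this guide."""
    env = dict(os.environ)
    env["nnUNet_n_proc_DA"] = str(workers)   # data-augmentation worker count
    env["CUDA_VISIBLE_DEVICES"] = gpu        # which GPU the process may see
    argv = ["nnUNetv2_train", str(dataset_id), configuration, fold, "-tr", trainer]
    return argv, env


if __name__ == "__main__":
    argv, env = build_train_call()
    if shutil.which(argv[0]) is None:
        print("nnUNetv2_train not found; activate the umamba environment first")
    else:
        subprocess.run(argv, env=env, check=True)
```

Passing the variables through `env` keeps them scoped to the training process, the same effect as prefixing them on the shell command line.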