This post was last updated 267 days ago, so some of the information may be out of date. If you spot an error, please email 3088506834@qq.com.

Preface

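This is the part required by the Introduction to Artificial Intelligence course. This post only covers how the relevant parameters were changed and how to set up the environment locally.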

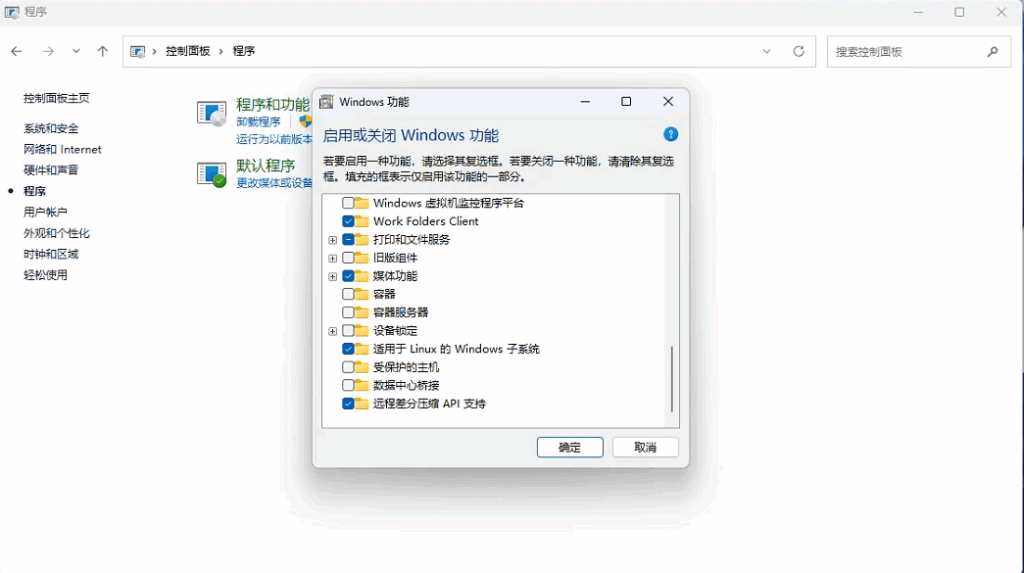

Environment setup

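A complaint before we start: this is an ancient environment (PaddlePaddle 1.8; even 1.8.5 would not run it, and it still failed with CUDA 10 and cuDNN 7 installed). Don't bother setting up a GPU environment just to save time (it's a minefield of problems). Run it on the CPU instead: slower, but reliable.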

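Install PaddlePaddle with the following command:

```bash
python -m pip install paddlepaddle==1.8.0.post107 -f https://paddlepaddle.org.cn/whl/stable.html
```

Next, install matplotlib:

```bash
pip install matplotlib
```

If you get `ModuleNotFoundError: No module named 'nltk'`, run the following command (don't ask why; I just ran into it):

```bash
pip install -U nltk
```

Recommended step: use Jupyter, i.e. the same notebook format the PaddlePaddle website uses (if you want to run it from PyCharm, you need PyCharm Professional):

```bash
pip install jupyter
```

Source code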

PaddlePaddle example code
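The example code trains three separate programs (D, G and Q), each minimizing one loss. As a reading aid, here is a minimal pure-Python sketch of those three formulas evaluated on a tiny hand-made batch. The values `d_real`, `d_fake`, `q_probs` and `c_onehot` are hypothetical stand-ins for the outputs of `discriminator` and `Q`, and only 3 classes are used instead of 10 to keep it readable; the `1e-8` term mirrors the epsilon the Paddle code adds inside every `log`.

```python
import math

EPS = 1e-8  # same epsilon the Paddle code adds inside every log


def d_loss(d_real, d_fake):
    """Discriminator loss: -mean(log D(x) + log(1 - D(G(z, c))))."""
    terms = [math.log(r + EPS) + math.log(1.0 - f + EPS) for r, f in zip(d_real, d_fake)]
    return -sum(terms) / len(terms)


def g_loss(d_fake):
    """Generator loss: -mean(log D(G(z, c)))."""
    return -sum(math.log(f + EPS) for f in d_fake) / len(d_fake)


def q_loss(q_probs, c_onehot):
    """Cross-entropy between the sampled one-hot code c and Q(c | x), averaged over the batch."""
    per_sample = [-sum(math.log(q + EPS) * ci for q, ci in zip(q_row, c_row))
                  for q_row, c_row in zip(q_probs, c_onehot)]
    return sum(per_sample) / len(per_sample)


# Hypothetical batch of two samples (3 classes instead of 10)
d_real = [0.9, 0.8]
d_fake = [0.2, 0.3]
q_probs = [[0.7, 0.2, 0.1], [0.1, 0.1, 0.8]]
c_onehot = [[1, 0, 0], [0, 0, 1]]

print("D loss:", d_loss(d_real, d_fake))
print("G loss:", g_loss(d_fake))
print("Q loss:", q_loss(q_probs, c_onehot))
```

Because `c` is one-hot, each row of the Q loss reduces to minus the log of the probability Q assigns to the sampled class. Since `theta_Q` also lists the generator parameters, minimizing it pushes the generator toward images whose class Q can recover.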

```python
# Define and train the model, showing generated samples during training
%matplotlib inline
# Render matplotlib figures directly inside the notebook
import paddle
import paddle.fluid as fluid
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.gridspec as gridspec
import os

# Batch size
mb_size = 32
# Length of the noise vector z
Z_dim = 16

# Sample the random inputs
def sample_Z(m, n):
    return np.random.uniform(-1., 1., size=[m, n])

def sample_c(m):
    return np.random.multinomial(1, 10*[0.1], size=m)

# Generator model
# Because of AI Studio environment limits, a two-layer FC network is used
def generator(inputs):
    G_h1 = fluid.layers.fc(input=inputs,
                           size=256,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="GW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Gb1", initializer=fluid.initializer.Constant()))
    G_prob = fluid.layers.fc(input=G_h1,
                             size=784,
                             act="sigmoid",
                             param_attr=fluid.ParamAttr(name="GW2", initializer=fluid.initializer.Xavier()),
                             bias_attr=fluid.ParamAttr(name="Gb2", initializer=fluid.initializer.Constant()))
    return G_prob

# Discriminator model
def discriminator(x):
    D_h1 = fluid.layers.fc(input=x,
                           size=128,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="DW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Db1", initializer=fluid.initializer.Constant()))
    D_logit = fluid.layers.fc(input=D_h1,
                              size=1,
                              act="sigmoid",
                              param_attr=fluid.ParamAttr(name="DW2", initializer=fluid.initializer.Xavier()),
                              bias_attr=fluid.ParamAttr(name="Db2", initializer=fluid.initializer.Constant()))
    return D_logit

# Approximate distribution of c (given the input X)
def Q(x):
    Q_h1 = fluid.layers.fc(input=x,
                           size=128,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="QW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Qb1", initializer=fluid.initializer.Constant()))
    Q_prob = fluid.layers.fc(input=Q_h1,
                             size=10,
                             act="softmax",
                             param_attr=fluid.ParamAttr(name="QW2", initializer=fluid.initializer.Xavier()),
                             bias_attr=fluid.ParamAttr(name="Qb2", initializer=fluid.initializer.Constant()))
    return Q_prob

# G optimization program
G_program = fluid.Program()
with fluid.program_guard(G_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    # Concatenate the inputs
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_fake = discriminator(G_sample)
    G_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_fake + 1e-8))
    theta_G = ["GW1", "Gb1", "GW2", "Gb2"]
    G_optimizer = fluid.optimizer.AdamOptimizer()
    G_optimizer.minimize(G_loss, parameter_list=theta_G)

# D optimization program
D_program = fluid.Program()
with fluid.program_guard(D_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    X = fluid.layers.data(name='X', shape=[784], dtype='float32')
    # MNIST pixels arrive scaled to [-1, 1]; map them to [0, 1] to match the generator's sigmoid output
    X = X * 0.5 + 0.5
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_real = discriminator(X)
    D_fake = discriminator(G_sample)
    D_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_real + 1e-8)
                                            + fluid.layers.log(1.0 - D_fake + 1e-8))
    theta_D = ["DW1", "Db1", "DW2", "Db2"]
    D_optimizer = fluid.optimizer.AdamOptimizer()
    D_optimizer.minimize(D_loss, parameter_list=theta_D)

# Q optimization program
Q_program = fluid.Program()
with fluid.program_guard(Q_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    Q_c_given_x = Q(G_sample)
    # Minimize the entropy term (cross-entropy between the sampled c and Q(c|x))
    Q_loss = fluid.layers.reduce_mean(
        0.0 - fluid.layers.reduce_sum(
            fluid.layers.elementwise_mul(fluid.layers.log(Q_c_given_x + 1e-8), c), 1))
    theta_Q = ["GW1", "Gb1", "GW2", "Gb2",
               "QW1", "Qb1", "QW2", "Qb2"]
    Q_optimizer = fluid.optimizer.AdamOptimizer()
    Q_optimizer.minimize(Q_loss, parameter_list=theta_Q)

# Inference
Infer_program = fluid.Program()
with fluid.program_guard(Infer_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)

# Read the data (training set only)
paddle.dataset.common.DATA_HOME = './data/data65'  # Change the download path to use a pre-saved copy of MNIST
train_reader = paddle.batch(
    paddle.reader.shuffle(
        paddle.dataset.mnist.train(), buf_size=500),
    batch_size=mb_size)

# Executor
exe = fluid.Executor(fluid.CPUPlace())  # use fluid.CUDAPlace(0) for GPU
exe.run(program=fluid.default_startup_program())

it = 0
for _ in range(33):
    for data in train_reader():
        it += 1
        # Get the training images
        X_mb = [data[i][0] for i in range(mb_size)]
        # Generate noise
        Z_noise = sample_Z(mb_size, Z_dim)
        c_noise = sample_c(mb_size)
        feeding_withx = {"X": np.array(X_mb).astype('float32'),
                         "Z": np.array(Z_noise).astype('float32'),
                         "c": np.array(c_noise).astype('float32')}
        feeding = {"Z": np.array(Z_noise).astype('float32'),
                   "c": np.array(c_noise).astype('float32')}
        # Run the three optimization steps: D, then G, then Q
        D_loss_curr = exe.run(feed=feeding_withx, program=D_program, fetch_list=[D_loss])
        G_loss_curr = exe.run(feed=feeding, program=G_program, fetch_list=[G_loss])
        Q_loss_curr = exe.run(feed=feeding, program=Q_program, fetch_list=[Q_loss])
        if it % 1000 == 0:
            print(str(it) + ' | '
                  + str(D_loss_curr[0][0]) + ' | '
                  + str(G_loss_curr[0][0]) + ' | '
                  + str(Q_loss_curr[0][0]))
        if it % 10000 == 0:
            # Show what the model generates
            Z_noise_ = sample_Z(mb_size, Z_dim)
            idx1 = np.random.randint(0, 10)
            idx2 = np.random.randint(0, 10)
            idx3 = np.random.randint(0, 10)
            idx4 = np.random.randint(0, 10)
            c_noise_ = np.zeros([mb_size, 10])
            c_noise_[range(8), idx1] = 1.0
            c_noise_[range(8, 16), idx2] = 1.0
            c_noise_[range(16, 24), idx3] = 1.0
            c_noise_[range(24, 32), idx4] = 1.0
            feeding_ = {"Z": np.array(Z_noise_).astype('float32'),
                        "c": np.array(c_noise_).astype('float32')}
            samples = exe.run(feed=feeding_,
                              program=Infer_program,
                              fetch_list=[G_sample])
            # Save the frozen inference model for later use
            fluid.io.save_inference_model(dirname='freeze_model', executor=exe,
                                          feeded_var_names=['Z', 'c'], target_vars=[G_sample],
                                          main_program=Infer_program)
            for i in range(32):
                ax = plt.subplot(4, 8, 1 + i)
                plt.axis('off')
                ax.set_xticklabels([])
                ax.set_yticklabels([])
                ax.set_aspect('equal')
                plt.imshow(np.reshape(samples[0][i], [28, 28]), cmap='Greys_r')
            plt.show()
```

Code after changing batch_size to 8
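Changing `mb_size` also changes how many iterations one pass over the data takes, and therefore how often the `it % 1000` log line and the `it % 10000` sample grid fire within the fixed 33 epochs. A rough pure-Python sketch of that arithmetic, assuming the 60,000-image MNIST training set and that `paddle.batch` keeps the last partial batch (its default `drop_last=False`); the function name `training_schedule` is made up for illustration:

```python
import math

N_TRAIN = 60000  # size of the MNIST training set


def training_schedule(mb_size, epochs=33, n_train=N_TRAIN):
    """Count iterations and how often the logging/display branches trigger."""
    iters_per_epoch = math.ceil(n_train / mb_size)  # a last partial batch counts as one iteration
    total_iters = iters_per_epoch * epochs
    return {
        "iters_per_epoch": iters_per_epoch,
        "total_iters": total_iters,
        "log_lines": total_iters // 1000,      # times `it % 1000 == 0` fires
        "sample_grids": total_iters // 10000,  # times `it % 10000 == 0` fires
        "divides_evenly": n_train % mb_size == 0,
    }


for size in (8, 32, 40):
    print(size, training_schedule(size))
```

All three batch sizes used in this post divide 60,000 evenly. For one that does not, the final batch would be shorter than `mb_size`, and `X_mb = [data[i][0] for i in range(mb_size)]` would raise an `IndexError`, so it is worth checking before trying other values.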

```python
# Define and train the model, showing generated samples during training
%matplotlib inline
# Render matplotlib figures directly inside the notebook
import paddle
import paddle.fluid as fluid
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.gridspec as gridspec
import os

# Batch size
mb_size = 8
# Length of the noise vector z
Z_dim = 16

# Sample the random inputs
def sample_Z(m, n):
    return np.random.uniform(-1., 1., size=[m, n])

def sample_c(m):
    return np.random.multinomial(1, 10*[0.1], size=m)

# Generator model
# Because of AI Studio environment limits, a two-layer FC network is used
def generator(inputs):
    G_h1 = fluid.layers.fc(input=inputs,
                           size=256,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="GW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Gb1", initializer=fluid.initializer.Constant()))
    G_prob = fluid.layers.fc(input=G_h1,
                             size=784,
                             act="sigmoid",
                             param_attr=fluid.ParamAttr(name="GW2", initializer=fluid.initializer.Xavier()),
                             bias_attr=fluid.ParamAttr(name="Gb2", initializer=fluid.initializer.Constant()))
    return G_prob

# Discriminator model
def discriminator(x):
    D_h1 = fluid.layers.fc(input=x,
                           size=128,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="DW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Db1", initializer=fluid.initializer.Constant()))
    D_logit = fluid.layers.fc(input=D_h1,
                              size=1,
                              act="sigmoid",
                              param_attr=fluid.ParamAttr(name="DW2", initializer=fluid.initializer.Xavier()),
                              bias_attr=fluid.ParamAttr(name="Db2", initializer=fluid.initializer.Constant()))
    return D_logit

# Approximate distribution of c (given the input X)
def Q(x):
    Q_h1 = fluid.layers.fc(input=x,
                           size=128,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="QW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Qb1", initializer=fluid.initializer.Constant()))
    Q_prob = fluid.layers.fc(input=Q_h1,
                             size=10,
                             act="softmax",
                             param_attr=fluid.ParamAttr(name="QW2", initializer=fluid.initializer.Xavier()),
                             bias_attr=fluid.ParamAttr(name="Qb2", initializer=fluid.initializer.Constant()))
    return Q_prob

# G optimization program
G_program = fluid.Program()
with fluid.program_guard(G_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    # Concatenate the inputs
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_fake = discriminator(G_sample)
    G_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_fake + 1e-8))
    theta_G = ["GW1", "Gb1", "GW2", "Gb2"]
    G_optimizer = fluid.optimizer.AdamOptimizer()
    G_optimizer.minimize(G_loss, parameter_list=theta_G)

# D optimization program
D_program = fluid.Program()
with fluid.program_guard(D_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    X = fluid.layers.data(name='X', shape=[784], dtype='float32')
    # MNIST pixels arrive scaled to [-1, 1]; map them to [0, 1] to match the generator's sigmoid output
    X = X * 0.5 + 0.5
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_real = discriminator(X)
    D_fake = discriminator(G_sample)
    D_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_real + 1e-8)
                                            + fluid.layers.log(1.0 - D_fake + 1e-8))
    theta_D = ["DW1", "Db1", "DW2", "Db2"]
    D_optimizer = fluid.optimizer.AdamOptimizer()
    D_optimizer.minimize(D_loss, parameter_list=theta_D)

# Q optimization program
Q_program = fluid.Program()
with fluid.program_guard(Q_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    Q_c_given_x = Q(G_sample)
    # Minimize the entropy term (cross-entropy between the sampled c and Q(c|x))
    Q_loss = fluid.layers.reduce_mean(
        0.0 - fluid.layers.reduce_sum(
            fluid.layers.elementwise_mul(fluid.layers.log(Q_c_given_x + 1e-8), c), 1))
    theta_Q = ["GW1", "Gb1", "GW2", "Gb2",
               "QW1", "Qb1", "QW2", "Qb2"]
    Q_optimizer = fluid.optimizer.AdamOptimizer()
    Q_optimizer.minimize(Q_loss, parameter_list=theta_Q)

# Inference
Infer_program = fluid.Program()
with fluid.program_guard(Infer_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)

# Read the data (training set only)
paddle.dataset.common.DATA_HOME = './data/data65'  # Change the download path to use a pre-saved copy of MNIST
train_reader = paddle.batch(
    paddle.reader.shuffle(
        paddle.dataset.mnist.train(), buf_size=500),
    batch_size=mb_size)

# Executor
exe = fluid.Executor(fluid.CPUPlace())  # use fluid.CUDAPlace(0) for GPU
exe.run(program=fluid.default_startup_program())

it = 0
for _ in range(33):
    for data in train_reader():
        it += 1
        # Get the training images
        X_mb = [data[i][0] for i in range(mb_size)]
        # Generate noise
        Z_noise = sample_Z(mb_size, Z_dim)
        c_noise = sample_c(mb_size)
        feeding_withx = {"X": np.array(X_mb).astype('float32'),
                         "Z": np.array(Z_noise).astype('float32'),
                         "c": np.array(c_noise).astype('float32')}
        feeding = {"Z": np.array(Z_noise).astype('float32'),
                   "c": np.array(c_noise).astype('float32')}
        # Run the three optimization steps: D, then G, then Q
        D_loss_curr = exe.run(feed=feeding_withx, program=D_program, fetch_list=[D_loss])
        G_loss_curr = exe.run(feed=feeding, program=G_program, fetch_list=[G_loss])
        Q_loss_curr = exe.run(feed=feeding, program=Q_program, fetch_list=[Q_loss])
        if it % 1000 == 0:
            print(str(it) + ' | '
                  + str(D_loss_curr[0][0]) + ' | '
                  + str(G_loss_curr[0][0]) + ' | '
                  + str(Q_loss_curr[0][0]))
        if it % 10000 == 0:
            # Show what the model generates (a single group of 8 shares one class)
            Z_noise_ = sample_Z(mb_size, Z_dim)
            idx1 = np.random.randint(0, 10)
            c_noise_ = np.zeros([mb_size, 10])
            c_noise_[range(8), idx1] = 1.0
            feeding_ = {"Z": np.array(Z_noise_).astype('float32'),
                        "c": np.array(c_noise_).astype('float32')}
            samples = exe.run(feed=feeding_,
                              program=Infer_program,
                              fetch_list=[G_sample])
            # Save the frozen inference model for later use
            fluid.io.save_inference_model(dirname='freeze_model_batch_size_8', executor=exe,
                                          feeded_var_names=['Z', 'c'], target_vars=[G_sample],
                                          main_program=Infer_program)
            for i in range(8):
                ax = plt.subplot(2, 4, 1 + i)
                plt.axis('off')
                ax.set_xticklabels([])
                ax.set_yticklabels([])
                ax.set_aspect('equal')
                plt.imshow(np.reshape(samples[0][i], [28, 28]), cmap='Greys_r')
            plt.show()
```

Code after changing batch_size to 40
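Each batch-size variant hard-codes one `idxN` per group of 8 display rows (one group for 8, four for 32, five for 40). A hypothetical helper, `display_codes`, that builds the same kind of display code matrix for any `mb_size` divisible by 8 is sketched below in plain Python so the pattern is easy to check; in the notebook the resulting rows would still need to go through `np.array(...).astype('float32')` before being fed.

```python
import random


def display_codes(mb_size, group=8, n_classes=10, rng=random):
    """Build one-hot codes where each consecutive group of rows shares one random class.

    Mirrors the idx1..idxN pattern in the notebook: rows 0-7 get class idx1,
    rows 8-15 get idx2, and so on.
    """
    if mb_size % group != 0:
        raise ValueError("mb_size must be a multiple of the group size")
    codes, classes = [], []
    for _ in range(mb_size // group):
        idx = rng.randint(0, n_classes - 1)  # inclusive bounds, same range as np.random.randint(0, 10)
        classes.append(idx)
        for _ in range(group):
            row = [0.0] * n_classes
            row[idx] = 1.0
            codes.append(row)
    return codes, classes


codes, classes = display_codes(40, rng=random.Random(0))
print(len(codes), classes)
```

The subplot grid has to match as well: rows × columns must equal `mb_size` (4×8, 2×4 and 4×10 in the three variants).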

```python
# Define and train the model, showing generated samples during training
%matplotlib inline
# Render matplotlib figures directly inside the notebook
import paddle
import paddle.fluid as fluid
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.gridspec as gridspec
import os

# Batch size
mb_size = 40
# Length of the noise vector z
Z_dim = 16

# Sample the random inputs
def sample_Z(m, n):
    return np.random.uniform(-1., 1., size=[m, n])

def sample_c(m):
    return np.random.multinomial(1, 10*[0.1], size=m)

# Generator model
# Because of AI Studio environment limits, a two-layer FC network is used
def generator(inputs):
    G_h1 = fluid.layers.fc(input=inputs,
                           size=256,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="GW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Gb1", initializer=fluid.initializer.Constant()))
    G_prob = fluid.layers.fc(input=G_h1,
                             size=784,
                             act="sigmoid",
                             param_attr=fluid.ParamAttr(name="GW2", initializer=fluid.initializer.Xavier()),
                             bias_attr=fluid.ParamAttr(name="Gb2", initializer=fluid.initializer.Constant()))
    return G_prob

# Discriminator model
def discriminator(x):
    D_h1 = fluid.layers.fc(input=x,
                           size=128,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="DW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Db1", initializer=fluid.initializer.Constant()))
    D_logit = fluid.layers.fc(input=D_h1,
                              size=1,
                              act="sigmoid",
                              param_attr=fluid.ParamAttr(name="DW2", initializer=fluid.initializer.Xavier()),
                              bias_attr=fluid.ParamAttr(name="Db2", initializer=fluid.initializer.Constant()))
    return D_logit

# Approximate distribution of c (given the input X)
def Q(x):
    Q_h1 = fluid.layers.fc(input=x,
                           size=128,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="QW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Qb1", initializer=fluid.initializer.Constant()))
    Q_prob = fluid.layers.fc(input=Q_h1,
                             size=10,
                             act="softmax",
                             param_attr=fluid.ParamAttr(name="QW2", initializer=fluid.initializer.Xavier()),
                             bias_attr=fluid.ParamAttr(name="Qb2", initializer=fluid.initializer.Constant()))
    return Q_prob

# G optimization program
G_program = fluid.Program()
with fluid.program_guard(G_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    # Concatenate the inputs
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_fake = discriminator(G_sample)
    G_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_fake + 1e-8))
    theta_G = ["GW1", "Gb1", "GW2", "Gb2"]
    G_optimizer = fluid.optimizer.AdamOptimizer()
    G_optimizer.minimize(G_loss, parameter_list=theta_G)

# D optimization program
D_program = fluid.Program()
with fluid.program_guard(D_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    X = fluid.layers.data(name='X', shape=[784], dtype='float32')
    # MNIST pixels arrive scaled to [-1, 1]; map them to [0, 1] to match the generator's sigmoid output
    X = X * 0.5 + 0.5
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_real = discriminator(X)
    D_fake = discriminator(G_sample)
    D_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_real + 1e-8)
                                            + fluid.layers.log(1.0 - D_fake + 1e-8))
    theta_D = ["DW1", "Db1", "DW2", "Db2"]
    D_optimizer = fluid.optimizer.AdamOptimizer()
    D_optimizer.minimize(D_loss, parameter_list=theta_D)

# Q optimization program
Q_program = fluid.Program()
with fluid.program_guard(Q_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    Q_c_given_x = Q(G_sample)
    # Minimize the entropy term (cross-entropy between the sampled c and Q(c|x))
    Q_loss = fluid.layers.reduce_mean(
        0.0 - fluid.layers.reduce_sum(
            fluid.layers.elementwise_mul(fluid.layers.log(Q_c_given_x + 1e-8), c), 1))
    theta_Q = ["GW1", "Gb1", "GW2", "Gb2",
               "QW1", "Qb1", "QW2", "Qb2"]
    Q_optimizer = fluid.optimizer.AdamOptimizer()
    Q_optimizer.minimize(Q_loss, parameter_list=theta_Q)

# Inference
Infer_program = fluid.Program()
with fluid.program_guard(Infer_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)

# Read the data (training set only)
paddle.dataset.common.DATA_HOME = './data/data65'  # Change the download path to use a pre-saved copy of MNIST
train_reader = paddle.batch(
    paddle.reader.shuffle(
        paddle.dataset.mnist.train(), buf_size=500),
    batch_size=mb_size)

# Executor
exe = fluid.Executor(fluid.CPUPlace())  # use fluid.CUDAPlace(0) for GPU
exe.run(program=fluid.default_startup_program())

it = 0
for _ in range(33):
    for data in train_reader():
        it += 1
        # Get the training images
        X_mb = [data[i][0] for i in range(mb_size)]
        # Generate noise
        Z_noise = sample_Z(mb_size, Z_dim)
        c_noise = sample_c(mb_size)
        feeding_withx = {"X": np.array(X_mb).astype('float32'),
                         "Z": np.array(Z_noise).astype('float32'),
                         "c": np.array(c_noise).astype('float32')}
        feeding = {"Z": np.array(Z_noise).astype('float32'),
                   "c": np.array(c_noise).astype('float32')}
        # Run the three optimization steps: D, then G, then Q
        D_loss_curr = exe.run(feed=feeding_withx, program=D_program, fetch_list=[D_loss])
        G_loss_curr = exe.run(feed=feeding, program=G_program, fetch_list=[G_loss])
        Q_loss_curr = exe.run(feed=feeding, program=Q_program, fetch_list=[Q_loss])
        if it % 1000 == 0:
            print(str(it) + ' | '
                  + str(D_loss_curr[0][0]) + ' | '
                  + str(G_loss_curr[0][0]) + ' | '
                  + str(Q_loss_curr[0][0]))
        if it % 10000 == 0:
            # Show what the model generates (five groups of 8, one class per group)
            Z_noise_ = sample_Z(mb_size, Z_dim)
            idx1 = np.random.randint(0, 10)
            idx2 = np.random.randint(0, 10)
            idx3 = np.random.randint(0, 10)
            idx4 = np.random.randint(0, 10)
            idx5 = np.random.randint(0, 10)
            c_noise_ = np.zeros([mb_size, 10])
            c_noise_[range(8), idx1] = 1.0
            c_noise_[range(8, 16), idx2] = 1.0
            c_noise_[range(16, 24), idx3] = 1.0
            c_noise_[range(24, 32), idx4] = 1.0
            c_noise_[range(32, 40), idx5] = 1.0
            feeding_ = {"Z": np.array(Z_noise_).astype('float32'),
                        "c": np.array(c_noise_).astype('float32')}
            samples = exe.run(feed=feeding_,
                              program=Infer_program,
                              fetch_list=[G_sample])
            # Save the frozen inference model for later use
            fluid.io.save_inference_model(dirname='freeze_model_batch_size_40', executor=exe,
                                          feeded_var_names=['Z', 'c'], target_vars=[G_sample],
                                          main_program=Infer_program)
            for i in range(40):
                ax = plt.subplot(4, 10, 1 + i)
                plt.axis('off')
                ax.set_xticklabels([])
                ax.set_yticklabels([])
                ax.set_aspect('equal')
                plt.imshow(np.reshape(samples[0][i], [28, 28]), cmap='Greys_r')
            plt.show()
```

Parameter tuning: SGD
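In this variant only the generator's optimizer changes, to `SGDOptimizer(learning_rate=0.001)`; D and Q keep `AdamOptimizer()`, whose documented default learning rate is also 0.001. Plain SGD applies `param -= learning_rate * grad` with no per-parameter rescaling, so at the same learning rate it can move much more slowly than Adam when gradients are small. The toy sketch below, on the made-up function f(w) = (w - 3)² rather than the GAN itself, shows just that update rule; `sgd_minimize` is a hypothetical name.

```python
def sgd_minimize(w0=0.0, lr=0.001, steps=1000):
    """Plain SGD on f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)
        w -= lr * grad  # the entire SGD rule: step against the gradient
    return w


w = sgd_minimize()
print("w after 1000 steps:", w, "remaining gap:", 3.0 - w)
```

Each step multiplies the distance to the minimum by (1 - 2·lr) = 0.998, so after 1000 steps roughly 13.5% of the initial gap is still left.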

```python
# Define and train the model, showing generated samples during training
%matplotlib inline
# Render matplotlib figures directly inside the notebook
import paddle
import paddle.fluid as fluid
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.gridspec as gridspec
import os

# Batch size
mb_size = 32
# Length of the noise vector z
Z_dim = 16

# Sample the random inputs
def sample_Z(m, n):
    return np.random.uniform(-1., 1., size=[m, n])

def sample_c(m):
    return np.random.multinomial(1, 10*[0.1], size=m)

# Generator model
# Because of AI Studio environment limits, a two-layer FC network is used
def generator(inputs):
    G_h1 = fluid.layers.fc(input=inputs,
                           size=256,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="GW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Gb1", initializer=fluid.initializer.Constant()))
    G_prob = fluid.layers.fc(input=G_h1,
                             size=784,
                             act="sigmoid",
                             param_attr=fluid.ParamAttr(name="GW2", initializer=fluid.initializer.Xavier()),
                             bias_attr=fluid.ParamAttr(name="Gb2", initializer=fluid.initializer.Constant()))
    return G_prob

# Discriminator model
def discriminator(x):
    D_h1 = fluid.layers.fc(input=x,
                           size=128,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="DW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Db1", initializer=fluid.initializer.Constant()))
    D_logit = fluid.layers.fc(input=D_h1,
                              size=1,
                              act="sigmoid",
                              param_attr=fluid.ParamAttr(name="DW2", initializer=fluid.initializer.Xavier()),
                              bias_attr=fluid.ParamAttr(name="Db2", initializer=fluid.initializer.Constant()))
    return D_logit

# Approximate distribution of c (given the input X)
def Q(x):
    Q_h1 = fluid.layers.fc(input=x,
                           size=128,
                           act="relu",
                           param_attr=fluid.ParamAttr(name="QW1", initializer=fluid.initializer.Xavier()),
                           bias_attr=fluid.ParamAttr(name="Qb1", initializer=fluid.initializer.Constant()))
    Q_prob = fluid.layers.fc(input=Q_h1,
                             size=10,
                             act="softmax",
                             param_attr=fluid.ParamAttr(name="QW2", initializer=fluid.initializer.Xavier()),
                             bias_attr=fluid.ParamAttr(name="Qb2", initializer=fluid.initializer.Constant()))
    return Q_prob

# G optimization program
G_program = fluid.Program()
with fluid.program_guard(G_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    # Concatenate the inputs
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_fake = discriminator(G_sample)
    G_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_fake + 1e-8))
    theta_G = ["GW1", "Gb1", "GW2", "Gb2"]
    # Changed: plain SGD for the generator (D and Q still use Adam)
    G_optimizer = fluid.optimizer.SGDOptimizer(learning_rate=0.001)
    G_optimizer.minimize(G_loss, parameter_list=theta_G)

# D optimization program
D_program = fluid.Program()
with fluid.program_guard(D_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    X = fluid.layers.data(name='X', shape=[784], dtype='float32')
    # MNIST pixels arrive scaled to [-1, 1]; map them to [0, 1] to match the generator's sigmoid output
    X = X * 0.5 + 0.5
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_real = discriminator(X)
    D_fake = discriminator(G_sample)
    D_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_real + 1e-8)
                                            + fluid.layers.log(1.0 - D_fake + 1e-8))
    theta_D = ["DW1", "Db1", "DW2", "Db2"]
    D_optimizer = fluid.optimizer.AdamOptimizer()
    D_optimizer.minimize(D_loss, parameter_list=theta_D)

# Q optimization program
Q_program = fluid.Program()
with fluid.program_guard(Q_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    Q_c_given_x = Q(G_sample)
    # Minimize the entropy term (cross-entropy between the sampled c and Q(c|x))
    Q_loss = fluid.layers.reduce_mean(
        0.0 - fluid.layers.reduce_sum(
            fluid.layers.elementwise_mul(fluid.layers.log(Q_c_given_x + 1e-8), c), 1))
    theta_Q = ["GW1", "Gb1", "GW2", "Gb2",
               "QW1", "Qb1", "QW2", "Qb2"]
    Q_optimizer = fluid.optimizer.AdamOptimizer()
    Q_optimizer.minimize(Q_loss, parameter_list=theta_Q)

# Inference
Infer_program = fluid.Program()
with fluid.program_guard(Infer_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)

# Read the data (training set only)
paddle.dataset.common.DATA_HOME = './data/data65'  # Change the download path to use a pre-saved copy of MNIST
train_reader = paddle.batch(
    paddle.reader.shuffle(
        paddle.dataset.mnist.train(), buf_size=500),
    batch_size=mb_size)

# Executor
exe = fluid.Executor(fluid.CPUPlace())  # use fluid.CUDAPlace(0) for GPU
exe.run(program=fluid.default_startup_program())

it = 0
for _ in range(33):
    for data in train_reader():
        it += 1
        # Get the training images
        X_mb = [data[i][0] for i in range(mb_size)]
        # Generate noise
        Z_noise = sample_Z(mb_size, Z_dim)
        c_noise = sample_c(mb_size)
        feeding_withx = {"X": np.array(X_mb).astype('float32'),
                         "Z": np.array(Z_noise).astype('float32'),
                         "c": np.array(c_noise).astype('float32')}
        feeding = {"Z": np.array(Z_noise).astype('float32'),
                   "c": np.array(c_noise).astype('float32')}
        # Run the three optimization steps: D, then G, then Q
        D_loss_curr = exe.run(feed=feeding_withx, program=D_program, fetch_list=[D_loss])
        G_loss_curr = exe.run(feed=feeding, program=G_program, fetch_list=[G_loss])
        Q_loss_curr = exe.run(feed=feeding, program=Q_program, fetch_list=[Q_loss])
        if it % 1000 == 0:
            print(str(it) + ' | '
                  + str(D_loss_curr[0][0]) + ' | '
                  + str(G_loss_curr[0][0]) + ' | '
                  + str(Q_loss_curr[0][0]))
        if it % 10000 == 0:
            # Show what the model generates
            Z_noise_ = sample_Z(mb_size, Z_dim)
            idx1 = np.random.randint(0, 10)
            idx2 = np.random.randint(0, 10)
            idx3 = np.random.randint(0, 10)
            idx4 = np.random.randint(0, 10)
            c_noise_ = np.zeros([mb_size, 10])
            c_noise_[range(8), idx1] = 1.0
            c_noise_[range(8, 16), idx2] = 1.0
            c_noise_[range(16, 24), idx3] = 1.0
            c_noise_[range(24, 32), idx4] = 1.0
            feeding_ = {"Z": np.array(Z_noise_).astype('float32'),
                        "c": np.array(c_noise_).astype('float32')}
            samples = exe.run(feed=feeding_,
                              program=Infer_program,
                              fetch_list=[G_sample])
            # Save the frozen inference model for later use
            fluid.io.save_inference_model(dirname='freeze_model_sgd', executor=exe,
                                          feeded_var_names=['Z', 'c'], target_vars=[G_sample],
                                          main_program=Infer_program)
            for i in range(32):
                ax = plt.subplot(4, 8, 1 + i)
                plt.axis('off')
                ax.set_xticklabels([])
                ax.set_yticklabels([])
                ax.set_aspect('equal')
                plt.imshow(np.reshape(samples[0][i], [28, 28]), cmap='Greys_r')
            plt.show()
```

Parameter tuning: Momentum
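Here the generator switches to `MomentumOptimizer(learning_rate=0.001, momentum=0.9)`, while D and Q again keep Adam. Fluid's documentation describes the (non-Nesterov) momentum update as `velocity = mu * velocity + gradient` followed by `param = param - learning_rate * velocity`. The sketch below applies that rule to the same made-up quadratic f(w) = (w - 3)² and compares it with plain SGD at the same learning rate. It illustrates the update rule only and says nothing definite about how the GAN will behave; the function names are hypothetical.

```python
def momentum_minimize(w0=0.0, lr=0.001, mu=0.9, steps=1000):
    """Heavy-ball momentum on f(w) = (w - 3)^2, in the velocity form described above."""
    w, velocity = w0, 0.0
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)
        velocity = mu * velocity + grad  # accumulate past gradients
        w -= lr * velocity               # step along the accumulated direction
    return w


def sgd_minimize(w0=0.0, lr=0.001, steps=1000):
    """Plain SGD on the same function, for comparison."""
    w = w0
    for _ in range(steps):
        w -= lr * 2.0 * (w - 3.0)
    return w


print("momentum gap:", abs(3.0 - momentum_minimize()))
print("sgd gap:     ", abs(3.0 - sgd_minimize()))
```

With mu = 0.9 the steady-state step is roughly lr / (1 - mu), about ten times the plain SGD step at the same learning rate, which is why momentum usually closes the gap much faster on a smooth problem like this one.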

# 模型的定义以及训练,并在训练过程中展示模型生成的效果

%matplotlib inline

#让matplotlib的输出图像能够直接在notebook上显示

import paddle

import paddle.fluid as fluid

import numpy as np

import matplotlib.pyplot as plt

import matplotlib.gridspec as gridspec

import os

#batch 的大小

mb_size = 32

#随机变量长度

Z_dim = 16

#生成随机变量

def sample_Z(m, n):

return np.random.uniform(-1., 1., size=[m, n])

def sample_c(m):

return np.random.multinomial(1, 10*[0.1], size=m)

#生成器模型

#由于aistudio环境限制,使用两层FC网络

def generator(inputs):

G_h1 = fluid.layers.fc(input = inputs,

size = 256,

act = "relu",

param_attr = fluid.ParamAttr(name="GW1",

initializer = fluid.initializer.Xavier()),

bias_attr = fluid.ParamAttr(name="Gb1",

initializer = fluid.initializer.Constant()))

G_prob = fluid.layers.fc(input = G_h1,

size = 784,

act = "sigmoid",

param_attr = fluid.ParamAttr(name="GW2",

initializer = fluid.initializer.Xavier()),

bias_attr = fluid.ParamAttr(name="Gb2",

initializer = fluid.initializer.Constant()))

return G_prob

#判别器模型

def discriminator(x):

D_h1 = fluid.layers.fc(input = x,

size = 128,

act = "relu",

param_attr = fluid.ParamAttr(name="DW1",

initializer = fluid.initializer.Xavier()),

bias_attr = fluid.ParamAttr(name="Db1",

initializer = fluid.initializer.Constant()))

D_logit = fluid.layers.fc(input = D_h1,

size = 1,

act = "sigmoid",

param_attr = fluid.ParamAttr(name="DW2",

initializer = fluid.initializer.Xavier()),

bias_attr = fluid.ParamAttr(name="Db2",

initializer = fluid.initializer.Constant()))

return D_logit

#c的概率近似(对于输入X)

def Q(x):

Q_h1 = fluid.layers.fc(input = x,

size = 128,

act = "relu",

param_attr = fluid.ParamAttr(name="QW1",

initializer = fluid.initializer.Xavier()),

bias_attr = fluid.ParamAttr(name="Qb1",

initializer = fluid.initializer.Constant()))

Q_prob = fluid.layers.fc(input = Q_h1,

size = 10,

act = "softmax",

param_attr = fluid.ParamAttr(name="QW2",

initializer = fluid.initializer.Xavier()),

bias_attr = fluid.ParamAttr(name="Qb2",

initializer = fluid.initializer.Constant()))

return Q_prob

#G优化程序

G_program = fluid.Program()

with fluid.program_guard(G_program, fluid.default_startup_program()):

Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')

c = fluid.layers.data(name='c', shape=[10], dtype='float32')

#合并输入

inputs = fluid.layers.concat(input=[Z, c], axis = 1)

G_sample = generator(inputs)

D_fake = discriminator(G_sample)

G_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_fake + 1e-8))

theta_G = ["GW1", "Gb1", "GW2", "Gb2"]

G_optimizer = fluid.optimizer.MomentumOptimizer(learning_rate=0.001, momentum=0.9)

G_optimizer.minimize(G_loss, parameter_list=theta_G)

#D优化程序

D_program = fluid.Program()

with fluid.program_guard(D_program, fluid.default_startup_program()):

Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')

c = fluid.layers.data(name='c', shape=[10], dtype='float32')

X = fluid.layers.data(name='X', shape=[784], dtype='float32')

X = X * 0.5 + 0.5

inputs = fluid.layers.concat(input=[Z, c], axis = 1)

G_sample = generator(inputs)

D_real = discriminator(X)

D_fake = discriminator(G_sample)

D_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_real + 1e-8)

+ fluid.layers.log(1.0 - D_fake + 1e-8))

theta_D = ["DW1", "Db1", "DW2", "Db2"]

D_optimizer = fluid.optimizer.AdamOptimizer()

D_optimizer.minimize(D_loss, parameter_list=theta_D)

#Q优化程序

Q_program = fluid.Program()

with fluid.program_guard(Q_program, fluid.default_startup_program()):

    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    Q_c_given_x = Q(G_sample)
    # Minimize the entropy term: cross-entropy between the sampled code c and Q(c|x)
    Q_loss = fluid.layers.reduce_mean(
        0.0 - fluid.layers.reduce_sum(
            fluid.layers.elementwise_mul(fluid.layers.log(Q_c_given_x + 1e-8), c), 1))
    theta_Q = ["GW1", "Gb1", "GW2", "Gb2",
               "QW1", "Qb1", "QW2", "Qb2"]
    Q_optimizer = fluid.optimizer.AdamOptimizer()
    Q_optimizer.minimize(Q_loss, parameter_list=theta_Q)

# Inference program
Infer_program = fluid.Program()
with fluid.program_guard(Infer_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)

# Read the data (training set only)
paddle.dataset.common.DATA_HOME = './data/data65'  # change the download path to use the pre-saved MNIST dataset
train_reader = paddle.batch(
    paddle.reader.shuffle(
        paddle.dataset.mnist.train(), buf_size=500),
    batch_size=mb_size)

# Executor
exe = fluid.Executor(fluid.CPUPlace())  # use fluid.CUDAPlace(0) to run on a GPU
exe.run(program=fluid.default_startup_program())

it = 0
for _ in range(33):
    for data in train_reader():
        it += 1
        # Fetch a batch of training images
        X_mb = [data[i][0] for i in range(mb_size)]
        # Generate noise
        Z_noise = sample_Z(mb_size, Z_dim)
        c_noise = sample_c(mb_size)
        feeding_withx = {"X": np.array(X_mb).astype('float32'),
                         "Z": np.array(Z_noise).astype('float32'),
                         "c": np.array(c_noise).astype('float32')}
        feeding = {"Z": np.array(Z_noise).astype('float32'),
                   "c": np.array(c_noise).astype('float32')}
        # Three optimization steps per iteration: D, then G, then Q
        D_loss_curr = exe.run(feed=feeding_withx, program=D_program, fetch_list=[D_loss])
        G_loss_curr = exe.run(feed=feeding, program=G_program, fetch_list=[G_loss])
        Q_loss_curr = exe.run(feed=feeding, program=Q_program, fetch_list=[Q_loss])
        if it % 1000 == 0:
            print(str(it) + ' | '
                  + str(D_loss_curr[0][0]) + ' | '
                  + str(G_loss_curr[0][0]) + ' | '
                  + str(Q_loss_curr[0][0]))
        if it % 10000 == 0:
            # Show generated samples: each row of the 4x8 grid shares one random digit code
            Z_noise_ = sample_Z(mb_size, Z_dim)
            idx1 = np.random.randint(0, 10)
            idx2 = np.random.randint(0, 10)
            idx3 = np.random.randint(0, 10)
            idx4 = np.random.randint(0, 10)
            c_noise_ = np.zeros([mb_size, 10])
            c_noise_[range(8), idx1] = 1.0
            c_noise_[range(8, 16), idx2] = 1.0
            c_noise_[range(16, 24), idx3] = 1.0
            c_noise_[range(24, 32), idx4] = 1.0
            feeding_ = {"Z": np.array(Z_noise_).astype('float32'),
                        "c": np.array(c_noise_).astype('float32')}
            samples = exe.run(feed=feeding_,
                              program=Infer_program,
                              fetch_list=[G_sample])
            # Save the frozen inference model for later use
            fluid.io.save_inference_model(dirname='freeze_model_momentum', executor=exe,
                                          feeded_var_names=['Z', 'c'], target_vars=[G_sample],
                                          main_program=Infer_program)
            for i in range(32):
                ax = plt.subplot(4, 8, 1 + i)
                plt.axis('off')
                ax.set_xticklabels([])
                ax.set_yticklabels([])
                ax.set_aspect('equal')
                plt.imshow(np.reshape(samples[0][i], [28, 28]), cmap='Greys_r')
            plt.show()

After changing epoch to 50

In this version the outer training loop runs for 50 passes (`range(50)`) and the model is saved to `freeze_model_epoch`.
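Raising the number of passes mainly changes how many iterations run, and therefore how many loss lines and sample grids you will see. A rough sketch of the arithmetic, assuming the MNIST training split has 60,000 images and `mb_size = 32` as in the code (60,000 divides evenly by 32, so there is no partial last batch); `iteration_budget` is a hypothetical helper, not part of Paddle:

```python
# Rough iteration budget for the InfoGAN training loop.
# Assumption: 60,000 MNIST training images and mb_size = 32.
TRAIN_SIZE = 60000
MB_SIZE = 32

def iteration_budget(epochs, log_every=1000, plot_every=10000):
    """Return (total iterations, number of loss prints, number of sample grids)."""
    batches_per_epoch = -(-TRAIN_SIZE // MB_SIZE)  # ceiling division: 1875 batches
    total = epochs * batches_per_epoch
    return total, total // log_every, total // plot_every

for epochs in (33, 50):
    total, prints, grids = iteration_budget(epochs)
    print(f"epochs={epochs}: {total} iterations, {prints} loss lines, {grids} sample grids")
```

Under these assumptions 33 passes give 61,875 iterations (61 loss lines, 6 grids) and 50 passes give 93,750 iterations (93 loss lines, 9 grids). Wall-clock time should grow roughly in proportion, so expect the 50-pass run to take about 1.5 times as long.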

```python
# Define and train the model, showing generated samples during training
# Display matplotlib figures directly in the notebook
%matplotlib inline

import paddle
import paddle.fluid as fluid
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.gridspec as gridspec
import os

# Batch size
mb_size = 32
# Length of the noise vector
Z_dim = 16

# Sample random inputs: uniform noise Z and a one-hot categorical code c
def sample_Z(m, n):
    return np.random.uniform(-1., 1., size=[m, n])

def sample_c(m):
    return np.random.multinomial(1, 10*[0.1], size=m)

# Generator
# Only two fully connected layers, because of AI Studio environment limits
def generator(inputs):
    G_h1 = fluid.layers.fc(
        input=inputs,
        size=256,
        act="relu",
        param_attr=fluid.ParamAttr(name="GW1", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Gb1", initializer=fluid.initializer.Constant()))
    G_prob = fluid.layers.fc(
        input=G_h1,
        size=784,
        act="sigmoid",
        param_attr=fluid.ParamAttr(name="GW2", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Gb2", initializer=fluid.initializer.Constant()))
    return G_prob

# Discriminator
def discriminator(x):
    D_h1 = fluid.layers.fc(
        input=x,
        size=128,
        act="relu",
        param_attr=fluid.ParamAttr(name="DW1", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Db1", initializer=fluid.initializer.Constant()))
    D_logit = fluid.layers.fc(
        input=D_h1,
        size=1,
        act="sigmoid",
        param_attr=fluid.ParamAttr(name="DW2", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Db2", initializer=fluid.initializer.Constant()))
    return D_logit

# Q network: approximates the distribution of c given the input x
def Q(x):
    Q_h1 = fluid.layers.fc(
        input=x,
        size=128,
        act="relu",
        param_attr=fluid.ParamAttr(name="QW1", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Qb1", initializer=fluid.initializer.Constant()))
    Q_prob = fluid.layers.fc(
        input=Q_h1,
        size=10,
        act="softmax",
        param_attr=fluid.ParamAttr(name="QW2", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Qb2", initializer=fluid.initializer.Constant()))
    return Q_prob

# G optimization program
G_program = fluid.Program()
with fluid.program_guard(G_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    # Concatenate the inputs
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_fake = discriminator(G_sample)
    G_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_fake + 1e-8))
    theta_G = ["GW1", "Gb1", "GW2", "Gb2"]
    G_optimizer = fluid.optimizer.AdamOptimizer()
    G_optimizer.minimize(G_loss, parameter_list=theta_G)

# D optimization program
D_program = fluid.Program()
with fluid.program_guard(D_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    X = fluid.layers.data(name='X', shape=[784], dtype='float32')
    # Map MNIST pixels from [-1, 1] to [0, 1] to match the generator's sigmoid output
    X = X * 0.5 + 0.5
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_real = discriminator(X)
    D_fake = discriminator(G_sample)
    D_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_real + 1e-8)
                                            + fluid.layers.log(1.0 - D_fake + 1e-8))
    theta_D = ["DW1", "Db1", "DW2", "Db2"]
    D_optimizer = fluid.optimizer.AdamOptimizer()
    D_optimizer.minimize(D_loss, parameter_list=theta_D)

# Q optimization program
Q_program = fluid.Program()
with fluid.program_guard(Q_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    Q_c_given_x = Q(G_sample)
    # Minimize the entropy term: cross-entropy between the sampled code c and Q(c|x)
    Q_loss = fluid.layers.reduce_mean(
        0.0 - fluid.layers.reduce_sum(
            fluid.layers.elementwise_mul(fluid.layers.log(Q_c_given_x + 1e-8), c), 1))
    theta_Q = ["GW1", "Gb1", "GW2", "Gb2",
               "QW1", "Qb1", "QW2", "Qb2"]
    Q_optimizer = fluid.optimizer.AdamOptimizer()
    Q_optimizer.minimize(Q_loss, parameter_list=theta_Q)

# Inference program
Infer_program = fluid.Program()
with fluid.program_guard(Infer_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)

# Read the data (training set only)
paddle.dataset.common.DATA_HOME = './data/data65'  # change the download path to use the pre-saved MNIST dataset
train_reader = paddle.batch(
    paddle.reader.shuffle(
        paddle.dataset.mnist.train(), buf_size=500),
    batch_size=mb_size)

# Executor
exe = fluid.Executor(fluid.CPUPlace())  # use fluid.CUDAPlace(0) to run on a GPU
exe.run(program=fluid.default_startup_program())

it = 0
for _ in range(50):
    for data in train_reader():
        it += 1
        # Fetch a batch of training images
        X_mb = [data[i][0] for i in range(mb_size)]
        # Generate noise
        Z_noise = sample_Z(mb_size, Z_dim)
        c_noise = sample_c(mb_size)
        feeding_withx = {"X": np.array(X_mb).astype('float32'),
                         "Z": np.array(Z_noise).astype('float32'),
                         "c": np.array(c_noise).astype('float32')}
        feeding = {"Z": np.array(Z_noise).astype('float32'),
                   "c": np.array(c_noise).astype('float32')}
        # Three optimization steps per iteration: D, then G, then Q
        D_loss_curr = exe.run(feed=feeding_withx, program=D_program, fetch_list=[D_loss])
        G_loss_curr = exe.run(feed=feeding, program=G_program, fetch_list=[G_loss])
        Q_loss_curr = exe.run(feed=feeding, program=Q_program, fetch_list=[Q_loss])
        if it % 1000 == 0:
            print(str(it) + ' | '
                  + str(D_loss_curr[0][0]) + ' | '
                  + str(G_loss_curr[0][0]) + ' | '
                  + str(Q_loss_curr[0][0]))
        if it % 10000 == 0:
            # Show generated samples: each row of the 4x8 grid shares one random digit code
            Z_noise_ = sample_Z(mb_size, Z_dim)
            idx1 = np.random.randint(0, 10)
            idx2 = np.random.randint(0, 10)
            idx3 = np.random.randint(0, 10)
            idx4 = np.random.randint(0, 10)
            c_noise_ = np.zeros([mb_size, 10])
            c_noise_[range(8), idx1] = 1.0
            c_noise_[range(8, 16), idx2] = 1.0
            c_noise_[range(16, 24), idx3] = 1.0
            c_noise_[range(24, 32), idx4] = 1.0
            feeding_ = {"Z": np.array(Z_noise_).astype('float32'),
                        "c": np.array(c_noise_).astype('float32')}
            samples = exe.run(feed=feeding_,
                              program=Infer_program,
                              fetch_list=[G_sample])
            # Save the frozen inference model for later use
            fluid.io.save_inference_model(dirname='freeze_model_epoch', executor=exe,
                                          feeded_var_names=['Z', 'c'], target_vars=[G_sample],
                                          main_program=Infer_program)
            for i in range(32):
                ax = plt.subplot(4, 8, 1 + i)
                plt.axis('off')
                ax.set_xticklabels([])
                ax.set_yticklabels([])
                ax.set_aspect('equal')
                plt.imshow(np.reshape(samples[0][i], [28, 28]), cmap='Greys_r')
            plt.show()
```

After changing the dataset

In this version `DATA_HOME` points to `./data/data4336`, the loop is back to 33 passes, and the model is saved to `freeze_model_dataset`.
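Because this variant only repoints `paddle.dataset.common.DATA_HOME`, a wrong folder tends to show up as an unexpected download rather than a clear error. As far as I understand the PaddlePaddle 1.x reader, it looks for the archives under `DATA_HOME/mnist/` using the standard MNIST file names and downloads only when a file is missing or fails its checksum; treat both the folder layout and the names below as assumptions to verify against your Paddle version. A minimal pre-flight check (`missing_mnist_files` is a hypothetical helper, and it checks existence only, not MD5 sums):

```python
import os

# Standard MNIST archive names (assumption: the Paddle 1.x reader expects these).
MNIST_FILES = [
    "train-images-idx3-ubyte.gz",
    "train-labels-idx1-ubyte.gz",
    "t10k-images-idx3-ubyte.gz",
    "t10k-labels-idx1-ubyte.gz",
]

def missing_mnist_files(data_home, train_only=True):
    """Return the MNIST archives not found in <data_home>/mnist/ (hypothetical helper)."""
    folder = os.path.join(data_home, "mnist")
    # The training code above only reads the training split.
    wanted = MNIST_FILES[:2] if train_only else MNIST_FILES
    return [name for name in wanted
            if not os.path.isfile(os.path.join(folder, name))]
```

For example, `missing_mnist_files('./data/data4336')` returning an empty list suggests the reader will use the local copy instead of downloading.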

```python
# Define and train the model, showing generated samples during training
# Display matplotlib figures directly in the notebook
%matplotlib inline

import paddle
import paddle.fluid as fluid
import numpy as np
import matplotlib.pyplot as plt
import matplotlib.gridspec as gridspec
import os

# Batch size
mb_size = 32
# Length of the noise vector
Z_dim = 16

# Sample random inputs: uniform noise Z and a one-hot categorical code c
def sample_Z(m, n):
    return np.random.uniform(-1., 1., size=[m, n])

def sample_c(m):
    return np.random.multinomial(1, 10*[0.1], size=m)

# Generator
# Only two fully connected layers, because of AI Studio environment limits
def generator(inputs):
    G_h1 = fluid.layers.fc(
        input=inputs,
        size=256,
        act="relu",
        param_attr=fluid.ParamAttr(name="GW1", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Gb1", initializer=fluid.initializer.Constant()))
    G_prob = fluid.layers.fc(
        input=G_h1,
        size=784,
        act="sigmoid",
        param_attr=fluid.ParamAttr(name="GW2", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Gb2", initializer=fluid.initializer.Constant()))
    return G_prob

# Discriminator
def discriminator(x):
    D_h1 = fluid.layers.fc(
        input=x,
        size=128,
        act="relu",
        param_attr=fluid.ParamAttr(name="DW1", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Db1", initializer=fluid.initializer.Constant()))
    D_logit = fluid.layers.fc(
        input=D_h1,
        size=1,
        act="sigmoid",
        param_attr=fluid.ParamAttr(name="DW2", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Db2", initializer=fluid.initializer.Constant()))
    return D_logit

# Q network: approximates the distribution of c given the input x
def Q(x):
    Q_h1 = fluid.layers.fc(
        input=x,
        size=128,
        act="relu",
        param_attr=fluid.ParamAttr(name="QW1", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Qb1", initializer=fluid.initializer.Constant()))
    Q_prob = fluid.layers.fc(
        input=Q_h1,
        size=10,
        act="softmax",
        param_attr=fluid.ParamAttr(name="QW2", initializer=fluid.initializer.Xavier()),
        bias_attr=fluid.ParamAttr(name="Qb2", initializer=fluid.initializer.Constant()))
    return Q_prob

# G optimization program
G_program = fluid.Program()
with fluid.program_guard(G_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    # Concatenate the inputs
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_fake = discriminator(G_sample)
    G_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_fake + 1e-8))
    theta_G = ["GW1", "Gb1", "GW2", "Gb2"]
    G_optimizer = fluid.optimizer.AdamOptimizer()
    G_optimizer.minimize(G_loss, parameter_list=theta_G)

# D optimization program
D_program = fluid.Program()
with fluid.program_guard(D_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    X = fluid.layers.data(name='X', shape=[784], dtype='float32')
    # Map MNIST pixels from [-1, 1] to [0, 1] to match the generator's sigmoid output
    X = X * 0.5 + 0.5
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    D_real = discriminator(X)
    D_fake = discriminator(G_sample)
    D_loss = 0.0 - fluid.layers.reduce_mean(fluid.layers.log(D_real + 1e-8)
                                            + fluid.layers.log(1.0 - D_fake + 1e-8))
    theta_D = ["DW1", "Db1", "DW2", "Db2"]
    D_optimizer = fluid.optimizer.AdamOptimizer()
    D_optimizer.minimize(D_loss, parameter_list=theta_D)

# Q optimization program
Q_program = fluid.Program()
with fluid.program_guard(Q_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)
    Q_c_given_x = Q(G_sample)
    # Minimize the entropy term: cross-entropy between the sampled code c and Q(c|x)
    Q_loss = fluid.layers.reduce_mean(
        0.0 - fluid.layers.reduce_sum(
            fluid.layers.elementwise_mul(fluid.layers.log(Q_c_given_x + 1e-8), c), 1))
    theta_Q = ["GW1", "Gb1", "GW2", "Gb2",
               "QW1", "Qb1", "QW2", "Qb2"]
    Q_optimizer = fluid.optimizer.AdamOptimizer()
    Q_optimizer.minimize(Q_loss, parameter_list=theta_Q)

# Inference program
Infer_program = fluid.Program()
with fluid.program_guard(Infer_program, fluid.default_startup_program()):
    Z = fluid.layers.data(name='Z', shape=[Z_dim], dtype='float32')
    c = fluid.layers.data(name='c', shape=[10], dtype='float32')
    inputs = fluid.layers.concat(input=[Z, c], axis=1)
    G_sample = generator(inputs)

# Read the data (training set only)
paddle.dataset.common.DATA_HOME = './data/data4336'  # change the download path to use the pre-saved MNIST dataset
train_reader = paddle.batch(
    paddle.reader.shuffle(
        paddle.dataset.mnist.train(), buf_size=500),
    batch_size=mb_size)

# Executor
exe = fluid.Executor(fluid.CPUPlace())  # use fluid.CUDAPlace(0) to run on a GPU
exe.run(program=fluid.default_startup_program())

it = 0
for _ in range(33):
    for data in train_reader():
        it += 1
        # Fetch a batch of training images
        X_mb = [data[i][0] for i in range(mb_size)]
        # Generate noise
        Z_noise = sample_Z(mb_size, Z_dim)
        c_noise = sample_c(mb_size)
        feeding_withx = {"X": np.array(X_mb).astype('float32'),
                         "Z": np.array(Z_noise).astype('float32'),
                         "c": np.array(c_noise).astype('float32')}
        feeding = {"Z": np.array(Z_noise).astype('float32'),
                   "c": np.array(c_noise).astype('float32')}
        # Three optimization steps per iteration: D, then G, then Q
        D_loss_curr = exe.run(feed=feeding_withx, program=D_program, fetch_list=[D_loss])
        G_loss_curr = exe.run(feed=feeding, program=G_program, fetch_list=[G_loss])
        Q_loss_curr = exe.run(feed=feeding, program=Q_program, fetch_list=[Q_loss])
        if it % 1000 == 0:
            print(str(it) + ' | '
                  + str(D_loss_curr[0][0]) + ' | '
                  + str(G_loss_curr[0][0]) + ' | '
                  + str(Q_loss_curr[0][0]))
        if it % 10000 == 0:
            # Show generated samples: each row of the 4x8 grid shares one random digit code
            Z_noise_ = sample_Z(mb_size, Z_dim)
            idx1 = np.random.randint(0, 10)
            idx2 = np.random.randint(0, 10)
            idx3 = np.random.randint(0, 10)
            idx4 = np.random.randint(0, 10)
            c_noise_ = np.zeros([mb_size, 10])
            c_noise_[range(8), idx1] = 1.0
            c_noise_[range(8, 16), idx2] = 1.0
            c_noise_[range(16, 24), idx3] = 1.0
            c_noise_[range(24, 32), idx4] = 1.0
            feeding_ = {"Z": np.array(Z_noise_).astype('float32'),
                        "c": np.array(c_noise_).astype('float32')}
            samples = exe.run(feed=feeding_,
                              program=Infer_program,
                              fetch_list=[G_sample])
            # Save the frozen inference model for later use
            fluid.io.save_inference_model(dirname='freeze_model_dataset', executor=exe,
                                          feeded_var_names=['Z', 'c'], target_vars=[G_sample],
                                          main_program=Infer_program)
            for i in range(32):
                ax = plt.subplot(4, 8, 1 + i)
                plt.axis('off')
                ax.set_xticklabels([])
                ax.set_yticklabels([])
                ax.set_aspect('equal')
                plt.imshow(np.reshape(samples[0][i], [28, 28]), cmap='Greys_r')
            plt.show()
```

Running

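The code above can be run locally as several instances at once. I recommend finishing the runs the day before class, since one full run takes about half an hour on CPU.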
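If you prefer one launcher over several notebook tabs, the standard library can start the variants side by side. A minimal sketch; `run_in_parallel` is a hypothetical helper, and it assumes each variant has been saved as a plain Python script. The variants already write to different folders (`freeze_model_momentum`, `freeze_model_epoch`, `freeze_model_dataset`), so parallel runs should not overwrite each other's saved models:

```python
import subprocess
import sys

def run_in_parallel(commands):
    """Start every command at the same time, wait for all of them, and return their exit codes.

    Each command is a list of arguments, as accepted by subprocess.Popen.
    """
    procs = [subprocess.Popen(cmd) for cmd in commands]
    return [p.wait() for p in procs]
```

For example, `run_in_parallel([[sys.executable, "infogan_epoch50.py"], [sys.executable, "infogan_dataset.py"]])`, where both file names are placeholders. In a plain script, drop the `%matplotlib inline` line (it only works inside IPython/Jupyter), and note that `plt.show()` may block or do nothing depending on the matplotlib backend. All jobs share the same CPU cores, so each one will likely run slower than it would alone.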
